If you find an error of any kind on this page, or if you need an answer that is not here, or if the explanation given here is insufficient, I am happy to help. Please send me an email request with "Q&A Book" in the subject line using timcrack@alum.mit.edu.

The individual letter solutions to all Q&A Book Questions appear on pp. 269/270 of the Q&A book and also appear online at http://www.foundationsforscientificinvesting.com/TIIQ7-MC-ANSWERS.pdf (for any files at the web site that are password protected, please look up "password" in the index of FFSI or Q&A Book).

The answers below are numbered using the numbers in the 7th edition of the Q&A Book. If, however, a question also appeared in the 6th edition, then that question number is given alongside the 7th edition number. For example, "Q2" gives the solution to Question 2 in the 7th and 6th editions, but "Q161/Q133" gives the answer to Q161 in the 7th edition (which is also Q133 in the 6th edition). If a Q&A book question appeared in the University of Otago FINC302 Mid-Term exam in 2020, then the MTQ number is given also. For example, Q140/2020MTQ5 gives the answer to Q140 from the 7th edition of the Q&A book (which also appeared as Q5 of the 2020 FINC302 Mid-Term exam).

Quoted page numbers are from the 10th Edition of Foundations for Scientific Investing: Capital Markets Intuition and Critical Thinking Skills (ISBN 978-0-9951173-6-5, December, 2020) (FFSI) unless otherwise indicated. Page numbers in the 13th edition of FFSI (February 2024) are roughly the same as for the 10th edition up to about p. 190, and then slowly deviate, with an additional 21 pages added by the time you get to the end.

- Q2 Thank you for your question. I am happy to help. The KiwiSaver account earns 6% per annum. You must draw a time line here. There is no growing annuity here. It is a level ordinary annuity. My advice is to use PVA (present value of annuity) and add it to the lump sum at t=0, then compound everything forwards 65 periods.

I get PV=lumpsum+PVA=$2,000+(C/r)*[1-1/(1+r)^N]=$2,000+($1,000/0.06)*[1-1/1.06^16]=$12,105.895.

Then FV=PV*(1.06)^65=$534,414, answer (c).
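If you would like to check the time-line arithmetic outside of a calculator, here is a minimal Python sketch of the two steps above (PV of the lump sum plus the level ordinary annuity, then compounding forward). The variable names are mine, and the inputs are simply the numbers used in the worked solution.

```python
# Inputs copied from the worked solution above; variable names are illustrative.
lump_sum = 2000.0      # deposit at t=0
payment = 1000.0       # level annual payment (ordinary annuity)
rate = 0.06            # KiwiSaver return per annum
n_payments = 16        # number of annuity payments
n_compound = 65        # number of periods to compound forward

# Present value of a level ordinary annuity: (C/r) * [1 - 1/(1+r)^N]
pva = (payment / rate) * (1.0 - 1.0 / (1.0 + rate) ** n_payments)
pv = lump_sum + pva

# Compound the t=0 value forward.
fv = pv * (1.0 + rate) ** n_compound

print(f"PV = {pv:,.3f}")
print(f"FV = {fv:,.0f}")
```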

- Q3 This matrix dimension question runs us all the way back to Section 1.2.2 of FFSI, which was there to prepare you for the Markowitz efficient set mathematics in Section 2.6.4.

Anything with a little arrow symbol (called "\vec") is a column vector. It has dimension Nx1 let us say. N=20 here.

Anything with a little ' symbol has been transposed. So this swaps rows for columns.

As discussed on pp. 27-28, if you multiply two matrices, their inner dimensions must agree, and their outer dimensions tell you the size of the outcome. This is also true for three matrices multiplied together.

So, A=(\vec i)'V^{-1}(\vec mu) is a (1xN) times an (NxN) times an (Nx1). The inner Ns agree, and the outer 1x1 tells us the size. Alternatively, you may simply recall that A was the 1x1 calculation I did for you to get you started on Q3.2.2 (Active Alpha Optimization; p. 254 Q&A Book).

(\vec H) and (\vec h_P) both have the little \vec symbol. So they are both Nx1 column vectors.

So, the answer is 1x1, Nx1, Nx1. With N=20 here.
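The "inner dimensions must agree, outer dimensions give the size" rule is mechanical enough to code up. The sketch below is only a dimension checker (it never touches actual numbers), and the helper name product_shape is my own invention for illustration.

```python
def product_shape(*shapes):
    """Return (rows, cols) of a chain of matrix products, checking conformability.

    Each shape is a (rows, cols) tuple. Hypothetical helper for illustration only.
    """
    rows, cols = shapes[0]
    for next_rows, next_cols in shapes[1:]:
        if cols != next_rows:
            raise ValueError(f"inner dimensions disagree: {cols} vs {next_rows}")
        cols = next_cols
    return rows, cols

N = 20
i_transposed = (1, N)   # (\vec i)' is a column vector of ones, transposed
V_inverse = (N, N)      # inverse of the NxN variance-covariance matrix
mu = (N, 1)             # \vec mu is an Nx1 column vector

shape_A = product_shape(i_transposed, V_inverse, mu)
print(shape_A)
```

Passing shapes whose inner dimensions disagree, such as (N, 1) followed by (N, N), raises an error, which is the non-conformal case.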

- Q4 The answer to this appears in the Quant Quiz in the box on the bottom of p. 29 of FFSI. Remember that variance is of form h'Vh, so standard deviation must be the square root of that form.

- Q6 This numerical derivative question is similar to the Quant Quiz on p. 33 of FFSI, using the table on p. 35 of FFSI. The worked solution is on p. 34.

To calculate a numerical derivative, all you need are numerical values of the function. These values can come from a table of values, or these values can come from evaluation of the function.

In this case, note first that you can completely ignore the written function erf(x)=... because you have a printed table of numerical function values. So, you do not need to use the given formula for anything. If, however, there was a formula (presumably much simpler), but no printed table, then you would take the same approach as follows, but in that case you would use the formula (like 2020MTQ10).

So, in this case, I can just ignore the formula and look at the table. We need to find the slope, which is the first derivative. That is just slope=[f(x+h)-f(x)]/h for small h. We are asked for this at x=6.

Let me choose x=6 and h=0.01 (the smallest step from x=6).

I get slope=[f(x+h)-f(x)]/h=[f(6.01)-f(6)]/0.01=[0.9301596-0.9316814]/0.01=-0.0015218/0.01=-0.15218, answer (b).
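Here is a minimal sketch of the same forward-difference calculation in Python. The two function values are the table entries quoted above; the helper name forward_difference is mine. If you had a formula instead of a table, you would evaluate it at x and x+h to get the two inputs.

```python
def forward_difference(f_x, f_x_plus_h, h):
    """Estimate the slope at x from two nearby function values."""
    return (f_x_plus_h - f_x) / h

# Table values quoted in the worked solution above.
f_at_6_00 = 0.9316814
f_at_6_01 = 0.9301596

slope_q6 = forward_difference(f_at_6_00, f_at_6_01, h=0.01)
print(round(slope_q6, 5))
```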

This matters because we need to understand how Excel Solver is calculating slopes. We saw, for example, in MIN-VAR-OBJ-REVISED.XLS that Solver calculates slopes using a very small step size, and it can be sitting at the minimum, but thinking it is at the maximum, because the slope is zero in all directions if you take only very small steps. This matters in Q3.2.2 (Active Alpha Optimization; p. 254 Q&A Book).

- Q9 Student-t test of the mean. This is a review of our extended discussion of how to build a t-test of the mean in Section 1.3.16 and in the COCO spreadsheets*. It is also tied in with discussion of the general form of a t- or Z-test in Section 1.3.14. So, review those, ultimately leading you to specific Equation 1.55. Remember that every t-test has a slightly different form: t-test of mean is (sample mean-null hypothesis value (0 here))/(s/sqrt(N)), where s is sample stdev. We are using Equation 1.55 from p. 100 of FFSI:

t=[Xbar - 0]/[sigma/sqrt{N}]

=[0.00064-0]/[0.013308/sqrt{500}]

=1.0753558, answer (b)
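A minimal Python sketch of this t-statistic follows, using the form (sample mean - null value)/(s/sqrt(N)) described above. The function name t_stat_mean is my own; the inputs are the Q9 numbers.

```python
import math

def t_stat_mean(sample_mean, sample_stdev, n, null_value=0.0):
    """t-statistic for the mean: (sample mean - null value) / (s / sqrt(N))."""
    standard_error = sample_stdev / math.sqrt(n)
    return (sample_mean - null_value) / standard_error

# Inputs from the Q9 worked solution above.
t_q9 = t_stat_mean(sample_mean=0.00064, sample_stdev=0.013308, n=500)
print(round(t_q9, 4))
```

The same helper applies to any other t-test of the mean in this list, with that question's sample mean, sample stdev, and N plugged in.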

*COCO Spreadsheets:

- Q10 Student-t test of correlation (in this case auto-correlation). Tied in with discussion of general form of the test in Section 1.3.14, ultimately leading you to specific Equations 1.43 and 1.44 (latter equation only to be used in case where N is big and correlation is small). Like Q9, remember that every t-test has a slightly different form: t-test of correlation is (sample correlation-0)/[sqrt(1-correlation^2)*(1/sqrt(N-2))], or approximately (sample correlation-0)/(1/sqrt(N)), if correlation is small and N is big. One student asked me how to use the Yule factor here, but Q10 does not use a Yule factor. The Yule factor is used for adjusting the t-test of the mean, not the t-test of the autocorrelation.

See p. 107 of FFSI for discussion of this point.

Let us use the approximate formula first (Equation 1.44; it follows algebraically from the exact equation when the correlation is small and N is big):

t= (sample correlation-0)/(1/sqrt(N))=0.056773/(1/sqrt(500))=1.269 (answer (c))

Now let us use the exact formula (Equation 1.43)

t=(sample correlation-0)/[sqrt(1-correlation^2)*(1/sqrt(N-2))]=0.056773/[sqrt(1-0.056773^2)(1/sqrt(498))]

=1.269 (answer (c))
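To see how close the approximate and exact forms are, here is a minimal Python sketch of both. The function names are mine; the formulas are the two quoted above (Equations 1.43 and 1.44 as written in this answer), and the inputs are the Q10 numbers.

```python
import math

def t_stat_corr_exact(r, n):
    """Exact form: r / [sqrt(1 - r^2) * (1 / sqrt(N - 2))]."""
    return r / (math.sqrt(1.0 - r * r) / math.sqrt(n - 2))

def t_stat_corr_approx(r, n):
    """Approximate form for small r and big N: r / (1 / sqrt(N))."""
    return r * math.sqrt(n)

r_q10, n_q10 = 0.056773, 500
t_exact = t_stat_corr_exact(r_q10, n_q10)
t_approx = t_stat_corr_approx(r_q10, n_q10)
print(round(t_approx, 3), round(t_exact, 3))
```

With a correlation this small and a sample this big, the two values agree to three decimal places, which is why either route leads to the same answer.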

- Q12 Like Q6, note first that you can completely ignore the written function f(x)=... because you have a printed table of function values. To calculate a numerical derivative, all you need are numerical values of the function. These values can come from a table of values, or these values can come from evaluation of the function. So, you do not need to use the given formula for anything. If, however, there was a formula (presumably much simpler), but no printed table, then you would take the same approach as follows, but in that case you would use the formula (like 2020MTQ10).

A student asked me "In class the example function was x^3, and I can understand how this works. However in Q12 I am specifically confused with the function which is a much more complicated, function: f(x) = sin(x · pi)-Gamma(x,pi) where Gamma(x,pi)… I'm aware that the process is the same, so I only have difficulty with applying the steps to the more complicated functions of x." The student was mixing up two concepts. This is a valuable mistake because we can all learn from it. Q12 asks for an estimate of the derivative to this function using numerical techniques. You are not looking for the exact analytical derivative.

We did not estimate the derivative for x^3 in FFSI; we found an exact analytical derivative in that case. These are two different concepts. Look at the quant quiz "first derivative" in the bottom half of p. 33 of FFSI for an estimated derivative using numerical techniques. To find slope, you evaluate the function at two values very close together, and divide by the step between them: [f(x+h)-f(x)]/h. In this case, we are finding the slope at x=7, so I used

slope=[f(x+h)-f(x)]/h

= [f(7.01)-f(7.0)]/0.01

= [-0.996766-(-0.965099)]/0.01

=-0.031667/0.01=-3.1667, answer (a).

Ask again if not clear.

- Q13 You can remove the words "small-cap" and "large-cap" from this question, if you wish, and the answer does not change. The only reason I included these was for emphasis. On pp. 105-106 of FFSI, I told you that small-cap stocks require large samples (e.g., N=200+) and large-cap stocks require smaller samples (e.g., N=100+) in order for the central limit theorem to overpower the non-normality of underlying data and allow the t-statistic for the mean to be valid. By stating the market cap, I was emphasizing that 30 is definitely too small and 500 is very likely big enough.

- Q14 Oh, that's interesting. When you see prices bouncing like this, they are bouncing between the bid and the ask (see pp. 214-215 of FFSI). This is a US stock, supported by a market maker, but we see similar patterns in NZ stocks. B, D, and F look like a customer buying at the ask price (think of it as being like a naive market order to buy hitting the ask in the NZX CLOB). Trades A and G look like a customer selling at the bid price (think of it as being like a naive market order to sell hitting the bid in the NZX CLOB). I cannot tell what trade E is; I would have to go back to the Bloomberg terminal and change the little box that says "Trade" to "Bid" or to "Ask" to see whether that was the bid or ask. Trade C must be a case of US price improvement. The only answer I like is Answer (b).

- Q15 Using the same logic as Q14, none of these answers makes sense. It must be (e).

- Q16 Yes, the bid-ask spread is $0.01 wide when trades A, B, C, and D occur. That's just the distance between the bid and the ask. I had to read the $0.01 off the vertical scale. Yes, the bid-ask spread is $0.01 wide when trades F and G occur. Yes, Trade C likely involves price improvement. Yes, we cannot immediately tell whether trade E was a customer buy or customer sell, because it is not obvious whether trade E takes place at the ask price just after the market maker dropped the level of the spread from 2.06-2.07 to 2.05-2.06, or whether trade E takes place at the bid just before the market maker dropped the level of the spread from 2.06-2.07 to 2.05-2.06. So, answers (a), (b), (c), and (d) are all true. I was looking for a false answer, so I am left with answer (e).

- Q17 I told you in Chapter 1 (Figure 1.18 p. 88) it would be non-linear, and we analyzed the relationship using two correlations: PPMCC (which assumes a linear relationship) and SROCC (which assumes generally increasing or generally decreasing relationship, but not necessarily linear). Answer (a) says OLS, but that assumes linearity, and the R^2 tells us how close to linear it is. Answer (b) says look at the PPMCC, but that assumes linearity (and PPMCC^2=R^2 for that reason). Answer (c) is just a rescaled version of Answer (a). If it wasn't linear in (a) it's not linear in (c). So, I think I would plot the data, and maybe use SROCC (if it were generally increasing or generally decreasing). In practice, in larger samples, stdev of returns tends to fall with rising market cap, but our 21-stock sample is too small to reveal it confidently.

- Q18 We are using Equation 1.55 from p. 100 of FFSI:

t=[Xbar - 0]/[sigma/sqrt{N}]=[-0.000327-0]/[0.017007/sqrt{500}]=-0.429937, answer (c).

- Q19 Student-t test of correlation (in this case auto-correlation). Tied in with discussion of general form of test in Section 1.3.14, ultimately leading you to specific Equations 1.43 and 1.44 (latter equation only to be used in case where N is big and correlation is small).

Let us use the approximate formula first (Equation 1.44): t= (sample correlation-0)/(1/sqrt(N))=-0.092763/(1/sqrt(500))=-2.074 (very close to answer (b))

Now let us use the exact formula (Equation 1.43):

t=(sample correlation-0)/[sqrt(1-correlation^2)*(1/sqrt(N-2))]=-0.092763/[sqrt(1-(-0.092763)^2)(1/sqrt(498))]=-2.079 (answer (b)).

- Q20 This takes us back to conformal matrix multiplication examples on pp. 27-28 of FFSI. A matrix has dimensions 2x3, for example, if it has 2 rows and 3 columns, like matrix B in Equation 1.4 on p. 28 of FFSI. If you multiply a (kxn) matrix by an (nx1) matrix, then the two matrices are conformal. That means that their inner dimensions (n here) are the same. If you multiply two matrices together, you know the size of the answer by looking at the outer dimensions. So, for example, if you multiply a (kxn) matrix by an (nx1) matrix, the answer is of dimension (kx1). There are two ways to answer this question. First, an informed answer with no real algebra involved. Second, a tedious step-by-step approach where we work out the dimension of each item in each multiplication, and then combine dimensions using the rules for conformal matrix multiplication just mentioned.

- First approach (my favorite): The first term [(mess)V^{-1}(mu - Rfi)] is named in the question as hp with a little vec symbol on it. So, we know that symbol means it is an Nx1 column vector. N=20 here, so this must be 20x1. The second term [V^{-1}(mu - Rfi)] is just pulled from the first term. The item I called (mess) must be a 1x1 scalar because mu_P and RF in the numerator of that mess are 1x1. You can always multiply a vector or matrix by a scalar, so in the expression [(mess)V^{-1}(mu - Rfi)], the first multiplication (mess)V^{-1} is scalar multiplication (which would just use "*" in Excel) but the second multiplication V^{-1}(mu - Rfi) is matrix multiplication (which would use MMULT in Excel). So, given that this "(mess)" term is just a scalar, the [V^{-1}(mu - Rfi)] term must have the same dimensions as the full [(mess)V^{-1}(mu - Rfi)] term, that is, 20x1. Giving answer (d).

- Second approach (more tedious, and not really needed): the first item in square brackets looks like [(mess)V^{-1}(mu - Rfi)]. We know that V is always the (NxN) square variance-covariance matrix. We know that mu here is an (Nx1) column vector because that's what the little vec symbol on top means, and the same for the i term. The item I called (mess) must be 1x1 because mu_P and RF in the numerator of that mess are 1x1. So, we have (1x1)(NxN)(Nx1). That looks like it is non-conformal because the inner dimensions (1x1)(NxN) do not agree, but that's scalar multiplication there. Like this: =(1x1)*MMULT(NxN,Nx1). That is, the first (mess) term is just a 1x1 number that multiplies everything that follows. So, we need only look at the dimensions of the MMULT(NxN,Nx1) term, which we get from the outer dimensions: Nx1. The second term [V^{-1}(mu-RFi)] is just like what we just worked out, for the same reasons: Nx1. N=20 here, so, I am looking for 20x1 and 20x1, answer (d).

- Q22 You have Equations (1.43) and (1.44) (the first two correlation t-statistics formulae on p. 95 of the book). The denominator is the standard error estimate. The first equation (1.43) yields sqrt{1-rho^2}/sqrt{N-2}=sqrt{1-(-0.04)^2}/sqrt{498}=0.044775, and the second equation (1.44), which is an approximation, yields 1/sqrt{N}=1/sqrt{500}=0.04472. I did not know which you would use, so I asked for an answer "close to" my given number. Keep in mind, from Equation 1.40 in Section 1.3.14 ("Intuition for General Functional Form of Z and t Tests") that Z and t tests always have the form [parameter-null value]/[standard error]. So, you can always pick the standard error out of the denominator.

- Q23 (see also Q46) You can almost always reject normality of returns for stocks (because of fat tails and peakedness in the distribution, relative to the shape of a normal distribution with the same mean and variance). So, we are looking for a number at the high end of the scale. 90% is the BEST answer here, though in the Otago University 2020 dataset, my students rejected normality in 100% of their stocks.

- Q24 Mean blur implies that you often cannot reject the null hypothesis that the mean return is zero. You can, however, reject it sometimes. So, I am looking for an answer that is small, but not zero. Answer (d) says a "small proportion". So, that is the best answer. In exercises in 2020, my students could reject the mean return of zero in six out of 21 stocks. This was an unusually high proportion and is attributable to a roaring bull market, with low volatility (during your sample period). This is not the normal state of affairs. In normal times, you would reject the mean of zero in maybe 2-3 stocks out of 21.

- Follow up student Question: You said that we "often cannot reject the null hypothesis that the mean return is zero." I do not fully comprehend this, so does it mean that the mean return is zero?

- Answer: No, it does not mean that the mean return is zero. Mean blur is when the mean return is a small number relative to the standard deviation of returns. In exercises that my students did in 2020, they saw that the average ratio of standard deviation of returns to mean returns in their 21 stocks was about 50. That is, the variability of returns is very high compared with the mean return. That is, a t-statistic for the mean will often be a small number (because it has mean in the numerator and standard deviation in the denominator). Sometimes the mean return on a stock is positive, sometimes the mean return is near zero, sometimes the mean return is negative. The problem with the high standard deviation of returns on stocks is that it is difficult to distinguish between these cases, because there is so much variability in the data. This means that even if the mean return is positive, we often cannot reject the null hypothesis H0:mu=0.
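To put a number on mean blur, here is a minimal Python sketch. It takes the ratio of about 50 (standard deviation of returns to mean return) from the answer above, and it ASSUMES a sample of N=500 daily returns, which is the sample size used in several other questions but is not stated for this exercise. It also assumes a positive mean, so that the ratio is positive.

```python
import math

stdev_to_mean_ratio = 50.0   # "about 50", from the 2020 class exercise described above
n_obs = 500                  # ASSUMPTION: sample size borrowed from other questions

# t = mean / (stdev / sqrt(N)) = sqrt(N) / (stdev / mean)
t_typical = math.sqrt(n_obs) / stdev_to_mean_ratio

# Sample size at which the same ratio would just reach |t| = 1.96
critical_value = 1.96        # two-sided 5% critical value in large samples
n_needed = (critical_value * stdev_to_mean_ratio) ** 2

print(round(t_typical, 4), round(n_needed))
```

Under these assumptions the typical t-statistic is well below 1.96, and you would need thousands of observations before a mean of that size stood out from the noise.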

- Q25 We discussed this on p. 80 of FFSI. We declared that the correlation would be in the region of 95% to 99%, more or less. Answer (b) is the only answer that comes close. Answer (a) is not sensible; these correlations cannot be bigger than 1. If the correlation were exactly 1 (a student asked), that would mean that a plot of P(t-1) versus P(t) is a perfect straight line. There is an interesting mathematical reason why you cannot have that and the correlation would not be defined. Ask me some other time.

- Q27 The t-test of the mean has three assumptions: normality, independence, and identical distributions for the underlying data. Yes, answer (b) is correct. One month is about 21 observations. A sample of 21 is not enough to enable the CLT to kick in and override the effect of the significant non-normality (see pp. 105-106 of FFSI). The small adjustment using the autocorrelation coefficient is not about correcting for non-normality. It is about correcting for auto-correlation, which is one form of dependence. It is discussed on p. 107 of FFSI. It does not apply here.

- Q28 Student-t test of the mean. This is a review of our extended discussion of how to build a t-test of the mean in Section 1.3.16 and in the COCO spreadsheets*. It is also tied in with discussion of the general form of a t- or Z-test in Section 1.3.14. So, review those, ultimately leading you to specific Equation 1.55. Remember that every t-test has a slightly different form: t-test of mean is (sample mean-null hypothesis value (0 here))/(s/sqrt(N)), where s is sample stdev. My students had a question on this in their 2020 Mid-Term exam (2020MTQ20). We are using Equation 1.55 from p. 100 of FFSI:

t=[Xbar - 0]/[sigma/sqrt{N}]

=[0.001436-0]/[0.0181796/sqrt{500}]

=1.76626, answer (c)

*COCO Spreadsheets

- Q29 Student-t test of correlation (in this case auto-correlation). Tied in with discussion of general form of test in Section 1.3.14, ultimately leading you to specific Equations 1.43 and 1.44 (latter equation only to be used in the case where N is big and correlation is small).

Let us use the approximate formula first (Equation 1.44):

t= (sample correlation-0)/(1/sqrt(N))=0.0252561/(1/sqrt(500))=0.5647 (answer (d))

Now let us use the exact formula (Equation 1.43)

t=(sample correlation-0)/[sqrt(1-correlation^2)*(1/sqrt(N-2))]=0.0252561/[sqrt(1-0.0252561^2)(1/sqrt(498))]=0.5638 (answer (d))

- Q30 Interesting. I showed this picture of NZX relative spreads during the trading day in my classes in 2019 and 2020. The PPMCC is for linear relationships. The relationship has been described as U-shaped. So, that is not linear. So, the PPMCC is not going to capture the full relationship. The SROCC is for monotonic relationships. That is, relationships of a mono (i.e., single) tone (i.e., manner). The SROCC is for generally increasing or generally decreasing relationships. The relationship has been described as U-shaped. So, that is not a single manner. It is neither generally increasing, nor generally decreasing. So, the SROCC is not appropriate either. What you really need to do is fit something like a parabola to it, and then use non-linear least squares to see how good the fit is. This is not something we have talked about.
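To illustrate why neither correlation helps with a U shape, here is a minimal Python sketch using SYNTHETIC data (a perfectly symmetric parabola, not the NZX spreads). The helper functions pearson, average_ranks, and spearman are my own bare-bones versions written for this illustration.

```python
import math

def pearson(xs, ys):
    """Pearson product-moment correlation (PPMCC)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)

def average_ranks(values):
    """Rank values from 1 to n, giving tied values the average of their ranks."""
    order = sorted(range(len(values)), key=lambda k: values[k])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        avg_rank = (start + end) / 2.0 + 1.0
        for k in order[start:end + 1]:
            ranks[k] = avg_rank
        start = end + 1
    return ranks

def spearman(xs, ys):
    """Spearman rank-order correlation (SROCC): Pearson applied to the ranks."""
    return pearson(average_ranks(xs), average_ranks(ys))

# Synthetic U-shaped data: "time of day" centred on zero, "spread" is a parabola.
times = list(range(-5, 6))
spreads = [t * t + 1 for t in times]

ppmcc = pearson(times, spreads)
srocc = spearman(times, spreads)
print(ppmcc, srocc)
```

Both correlations come out at zero for this symmetric U, even though the spread is an exact function of time. Real intraday spreads are not perfectly symmetric, so the numbers would not be exactly zero, but the point carries over.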

- Q31 See also Q40 and Q125. The ratio of variances is an F-test, as long as the samples (and thus their variances) are independent, and the underlying data are independent and identically normally distributed. We looked at the F-test on p. 59 (constructive demonstration applying very generally), on p. 63 (relationships between distributions summarizing the previous), and on p. 103 (specific construction corresponding to this question, following on from part of the t-test construction).

- Q35 This one is very similar to the Quant Quiz on p. 96 (with worked solution there). We have a t-statistic formula Equation 1.43 but I do find it messy to work with. So, given that the correlation is relatively small, I think the sample size will have to be big for it to be significant. So, let us instead use the approximation formula Equation 1.44. That one says t=rho/[1/sqrt(N)], where "rho" is the correlation. That simplifies to t=sqrt(N)*rho. That will be much easier to work with.

Let me point out a sneaky way to do this without any algebra. Just use t=sqrt(N)*rho directly, and try N=500 (answer a), then N=1400 (answer b), until you find the answer! Answer (a) was too small, but (b) did the job. Then you are done.

...but by long hand, when N is large, the t distribution looks like a standard normal distribution. So, for a two-sided test to be just significant at the 5% level, the t-stat must be 1.96 (plus or minus).

So, I am going to solve 1.96=|t|, for N, where |t| is absolute value.

Algebra: 1.96=|t|=sqrt(N)*|rho| implies that sqrt(N)=1.96/|rho|=1.96/0.0532845=36.78. Square both sides to get N=1,353. Rounding up to the nearest 100, I get 1,400, answer (b).
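Both routes (the sneaky trial-and-error and the long-hand algebra) can be checked with a few lines of Python. This is a minimal sketch using the approximation t=sqrt(N)*rho and the numbers quoted above; the variable names are mine.

```python
import math

rho = 0.0532845          # sample correlation from the question
critical_value = 1.96    # large-sample two-sided 5% critical value

# Sneaky route: try the candidate sample sizes from answers (a) and (b).
t_at_500 = math.sqrt(500) * abs(rho)
t_at_1400 = math.sqrt(1400) * abs(rho)
print(round(t_at_500, 3), round(t_at_1400, 3))

# Long-hand route: solve 1.96 = sqrt(N) * |rho| for N, then round up to the nearest 100.
n_exact = (critical_value / abs(rho)) ** 2
n_rounded = math.ceil(n_exact / 100) * 100
print(round(n_exact), n_rounded)
```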

- Q37 Delta = the derivative of option price with respect to stock price. Or, in other words, Delta = the slope of the option pricing function. So, you need to estimate slope, just like Q6 and Q12, though those lack the option pricing context. In this exercise you have a table to give you option prices. This time I did not give the function, but I can tell you it looks like the Black-Scholes formula for a European-style put option to me. Numerical slopes are discussed on p. 33 and p. 34 of FFSI. Please review FFSI and try again. I need to evaluate the function at two values very close together, and divide by the step between them: [f(x+h)-f(x)]/h. In this case, we are finding the slope at x=5, so I used

slope=[f(x+h)-f(x)]/h

= [f(5.01)-f(5.0)]/0.01

=[0.2720-0.2766]/0.01

=-0.0046/0.01=-0.46, answer (b).

- Q39 This is a guess really, based on our observation that having perfect foresight 1% of the time added 1% per annum to our rate of return. So, 50.5%-49.5% is a 1% of the time advantage.

- Q40 F-tests appear on p. 103 of FFSI, building upon the earlier work in the t-stat argument (and also the ping-pong ball argument on p. 59). So, all you need is a ratio of sample variances: F=0.025^2/0.015^2=2.78.

- Q42 Read the answer to Q20 before trying this. As argued in Q20, hP with the vec symbol is an Nx1 column vector (that's what the vec symbol means here). So, we immediately know that hP= [(mess)V^{-1}(mu - Rfi)] must be Nx1 (looking at the left-hand side and completely ignoring the right-hand side), and N=20 here, so [(mess)V^{-1}(mu - Rfi)] is 20x1. Using the same rules as in Q20, [(mu - Rfi)'V^{-1}(mu - Rfi)] is [(Nx1)'(NxN)(Nx1)]=[(1xN)(NxN)(Nx1)], remembering that the transpose of that first term swaps rows and columns. Then the dimension of this can just be read off the outer dimensions: (1x1). So I get answer (a).

- Q43 Contrast this question with Q4. The Tobin frontier is obtained by investing in the risky assets plus investing in the riskless asset.

For the Tobin frontier, the vector hP (i.e., the weight of the investments in the risky assets), need not add to 100%. For example, if you put half your money in the risky assets and half in the riskfree asset, then hP adds to 50% and hP alone does not determine your return. Because half your money is in the riskless asset in this case, you need to bring RF into it to account for the return from the part of your money in the riskfree asset.

So, for the Tobin frontier we have mean = hP' mu + (1-hP'i) RF. How do you read that? Well, hP' mu is the return from the risky assets, as usual, and hP'i is the vector of portfolio weights times that vector of ones we used in Q3.2.1 (Markowitz; p. 251 Q&A Book) and Q3.2.2 (Active Alpha optimization; p. 254 Q&A Book). All that hP'i does is add up the weights. So, for example, suppose there are four stocks, and suppose hP=(0.25 0.25 0.00 0.00)'. Then you have 25% in stock 1, 25% in stock 2, nothing in stock 3, and nothing in stock 4 (the other half of your money is assumed to be invested in the riskless asset). Then mean = hP' mu + (1-hP'i) RF is given by [(0.25*mu1)+(0.25*mu2)+(0.00*mu3)+(0.00*mu4)] + [1-((0.25*1)+(0.25*1)+(0.00*1)+(0.00*1))]*RF, which yields (0.25*mu1)+(0.25*mu2)+0.50*RF. That is, your mean return is 25% times the mean return on stock 1, plus 25% times the mean return on stock 2, plus 50% (the balance of your investment) times the return on the riskless asset. That seems to make sense if 25% of your money is in stock 1, and 25% of your money is in stock 2, and 50% of your money is in the riskless asset.
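Here is a minimal Python sketch of mean = hP' mu + (1-hP'i) RF for the four-stock example. The weights are the ones from the example; the mean returns and the riskless rate are HYPOTHETICAL numbers I made up purely so that the code has something to compute, and the function name tobin_mean is mine.

```python
def tobin_mean(weights, mus, rf):
    """Mean return on a Tobin frontier portfolio: hP' mu + (1 - hP' i) * RF."""
    risky_part = sum(w * m for w, m in zip(weights, mus))   # hP' mu
    riskless_weight = 1.0 - sum(weights)                    # 1 - hP' i
    return risky_part + riskless_weight * rf

h_p = [0.25, 0.25, 0.00, 0.00]   # weights from the example above
mus = [0.10, 0.08, 0.12, 0.06]   # HYPOTHETICAL mean returns on the four stocks
rf = 0.02                        # HYPOTHETICAL riskless rate

mean_p = tobin_mean(h_p, mus, rf)
print(round(mean_p, 4))
```

If the weights add to exactly 1 (the fully invested case), the riskless weight is zero and the RF term drops out.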

Note that in the special case where the Tobin Frontier portfolio P=T (i.e., the Tangency Portfolio), the portfolio is fully invested. In this case only, the RF component does not appear. In this case only, Answer (a) is correct. Otherwise, Answer (a) is false.

TEST: What if RF=0? Is Answer (a) correct all the time then? I hope you answered "no."

- Q45 (see also Q83) I built this question purposely because many students (you are not alone by any means!) were confusing two different concepts. Let me explain and add another comment.

First, if there is significant excess kurtosis (and there often is!) then your data are not normally distributed. End of story. Sample size is irrelevant. Either the data are normally distributed or not normally distributed, and significant excess kurtosis (or significant skewness) is enough to reject normality. So, answer (b) is correct.

Second, the Student-t test of the mean is built on three assumptions: your data are normally distributed, your data are statistically independent of each other, and your data are identically distributed. I can prove, however, using some probability theorems and two pages of detailed algebra, that if you have a large enough sample, say over 200, then the first assumption is no longer needed, as long as the other two assumptions hold. That is, even if your data are not normally distributed, you can still compare your calculated Student-t statistic for the mean to the Student-t tables.

Note that in neither of the above cases does anything happen that makes the data normally distributed. So, answer (d) is not correct, although the second half of the sentence in answer (d) is correct in the case of a Student-t test of the mean, which is not mentioned anywhere in the question.

Let me add one other comment. In the case where the sample size is large, the Student-t random variable behaves like a standard normal random variable. So, when conducting a t-test in a large sample, you can just compare your test statistic to Z (i.e., standard normal) tables.

- Q46 (see also Q23) You can almost always reject normality of returns for stocks (because of fat tails and peakedness in the distribution, relative to the shape of a normal distribution with the same mean and variance). So, we are looking for a number at the high end of the scale. Answer (a) says "most if not all, say nine or 10" and is the BEST answer here, though in the Otago University FINC302 2020 dataset, my students rejected normality in 100% of their stocks. Q53 was about mean blur, but Q46 is about non-normality, and especially kurtosis. My students tested for normality in 2020 using 21 stocks and rejected normality for every one of the 21 stocks. The rejections were very strong, and driven mostly by excess kurtosis (i.e., peakedness and fat tails relative to a normal distribution with the same mean and variance). This is a standard result in finance. We very often reject normality of returns. So, the answer must be (a), we will reject normality for most, if not all, of them. Figure 1.16 on p. 71 of FFSI is a nice illustration of this; keep in mind, however, that I truncated this figure. That is, although you can see the peak near zero (caused by lots of small returns during calm periods) the figure actually extends to about 11% or 12% on the right-hand side and to -20% on the left-hand side (i.e., fat tails with extreme events).

- Q47 I use the approximation formula Equation 1.44. I get t=rho/(1/sqrt(N))=0.0668/(1/sqrt(504))=1.4996. That leads me to answer (b).

- Q51 This ties in with discussion of correlation and prediction on p. 80 of FFSI "Correlation Example with Actual Stock Prices". The short answer is that you can predict prices really well using lagged prices (so, b = 1, roughly), but that's not where the money is. You cannot predict returns easily at all (so, d = 0, roughly), and that is where the money is.

- Q53 is about mean blur. Out of every 10 stocks there are usually only "a few" for which the mean return is significant, given the high variability. Note also that this is about the first and second moments (mean and variance) and has little or nothing to do with the third or fourth moments (skewness and kurtosis).

- Q54 is about p. 80 of FFSI. P(t-1) is a very good predictor of P(t). You can test this yourself using prices (without any missing observations). P(t-1) is such a good predictor of P(t) that the R^2 is going to be very close to 1. Ask yourself, if I tell you that Microsoft (MSFT) closed at $187.74 per share this morning, what is a very good guess of where it will close during the next trading session? Well it could go up a little, and it could go down a little, so, on average, over time, if you guess that its next closing price is the same as its most recent closing price, on average you will be very close to being correct. A price process where your best guess of tomorrow's price is today's price is called a "martingale," which you may come across in FINC306 (i.e., our derivatives class).
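If you do not have price data to hand, a simulation makes the same point. The sketch below is my own illustration, not the calculation from FFSI: it ASSUMES independent normal daily log returns with made-up parameters, builds a price path, and compares the lag-one correlation of prices with the lag-one correlation of returns.

```python
import math
import random

def pearson(xs, ys):
    """Pearson product-moment correlation."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)

random.seed(0)                            # fixed seed so the run is repeatable
n_days = 2000                             # ASSUMED sample length
daily_mean, daily_stdev = 0.0005, 0.01    # ASSUMED daily log-return parameters

prices = [100.0]                          # ASSUMED starting price
returns = []
for _ in range(n_days):
    r = random.gauss(daily_mean, daily_stdev)
    returns.append(r)
    prices.append(prices[-1] * math.exp(r))

# Correlation of P(t-1) with P(t), and of r(t-1) with r(t).
price_corr = pearson(prices[:-1], prices[1:])
return_corr = pearson(returns[:-1], returns[1:])
print(round(price_corr, 4), round(return_corr, 4))
```

The lagged price correlation sits very close to 1 even though, by construction, the returns are unpredictable.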

- Q56 (see also the Quant Quiz on p. 108 of FFSI) We know it is chi-squared because it is independent squared standard normal terms added up. There are 45 such independent terms, so there are 45 degrees of freedom. This follows directly from the definition of a chi-squared random variable given on p. 58 of FFSI.
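You can convince yourself of this by simulation. The sketch below is an illustration of my own: it adds up 45 independent squared standard normal draws, repeats that many times, and compares the sample mean and variance with the textbook chi-squared values (mean equal to the degrees of freedom, variance equal to twice the degrees of freedom). The number of repetitions and the seed are arbitrary choices.

```python
import random

random.seed(1)               # fixed seed so the run is repeatable
degrees_of_freedom = 45      # number of independent squared standard normal terms
n_draws = 5000               # arbitrary number of simulated chi-squared draws

def chi_squared_draw(k):
    """One draw: the sum of k independent squared standard normals."""
    return sum(random.gauss(0.0, 1.0) ** 2 for _ in range(k))

draws = [chi_squared_draw(degrees_of_freedom) for _ in range(n_draws)]
sample_mean = sum(draws) / n_draws
sample_var = sum((d - sample_mean) ** 2 for d in draws) / (n_draws - 1)

print(round(sample_mean, 2), round(sample_var, 2))
```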

- Q62 Look at Figure 1.7 on p. 42. You can see that that little hatched rectangle of width wi is only an approximation to a lump of probability mass (i.e., you can see those corners at the top are not meeting the density function). In fact, you can see that wi is, maybe, 5mm wide in the figure. That is, wi is non-infinitesimal. When the integral is written with the "dx" in it, then yes, that "dx" is an infinitesimal quantity. When the integral is written as an approximate summation involving, in this case, heights hi and widths wi of little boxes, and values of xi, then that little width wi is not infinitesimal. That's why that summation is an approximation to the value of the integral. The key is that as we let wi go to zero (so the rectangles get narrower and the count of them increases), then in the limit as wi goes to zero, our approximation becomes more and more accurate. So dx is the limiting value of wi, when wi has gone to zero.

- Q63 (see also Q113 and Q134/2020MTQ22) Load up the spreadsheet

Q63-Q113-20200610-REVISED.xlsx

and hit F9 a few times to watch the simulation. I used Equation 2.2 (p. 153 FFSI (14th Ed.); geometric Brownian motion RW) to generate the continuously compounded returns (CCR) r(n)=mu*tau+sigma*sqrt(tau)*z(n) (with tau=time step) and then I used P(n+1)=P(n)*exp[r(n)] to generate prices. With correlation questions like this, always go back to basics. The correlation is the covariance divided by a product of standard deviations, like in Equation 1.41 on p. 96 of FFSI (14th Ed.). The standard deviations are non-negative. So, the correlation takes its sign from the sign of the covariance in the numerator. So, it comes down to the sign of the covariance. Now go back to basics again. The covariance is given by Equation 1.36 (p. 84 FFSI (14th Ed.)). It is a product of moments. So, the sign of the covariance is determined by whether the two terms (X minus mean) and (Y minus mean) have the same sign or different sign. If both random variables X and Y tend to be above their means at the same time, and below their means at the same time, then you get positive*positive and negative*negative, both of which yield a positive covariance and thus a positive correlation. If, however, X tends to be above its mean when Y is below its mean, and vice versa, then you get positive*negative and negative*positive, both of which yield a negative covariance and thus a negative correlation. The description of prices and returns in this question is enough to deduce the signs here, and you can see it in action in the spreadsheet simulation.
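If you do not have the spreadsheet to hand, the same recipe can be sketched in Python. This is only an illustration: the drifts (+30% and -30% per annum), the 10% volatility, the daily time step, the starting price, and the seed are assumptions I picked for the sketch, not values taken from the spreadsheet or from the question.

```python
import math
import random


def simulate_prices(p0, mu, sigma, tau, n_steps, rng):
    """P(n+1) = P(n) * exp(r(n)), with r(n) = mu*tau + sigma*sqrt(tau)*z(n)."""
    prices = [p0]
    for _ in range(n_steps):
        z = rng.gauss(0.0, 1.0)  # standard normal shock
        r = mu * tau + sigma * math.sqrt(tau) * z  # continuously compounded return
        prices.append(prices[-1] * math.exp(r))
    return prices


def ccr(prices):
    """Continuously compounded returns implied by a price series."""
    return [math.log(b / a) for a, b in zip(prices, prices[1:])]


def pearson(x, y):
    """Correlation = covariance / (product of standard deviations)."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


rng = random.Random(2020)  # assumed seed, so the run is repeatable
tau = 1.0 / 252.0          # assumed daily time step
up = simulate_prices(10.0, 0.30, 0.10, tau, 252, rng)     # upward-drifting stock
down = simulate_prices(10.0, -0.30, 0.10, tau, 252, rng)  # downward-drifting stock

print("correlation of prices :", round(pearson(up, down), 3))
print("correlation of returns:", round(pearson(ccr(up), ccr(down)), 3))
```

Change the seed (the analogue of hitting F9) and compare how the correlation of prices and the correlation of returns behave from run to run.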

- Q64 Kurtosis is both peakedness and fat tails relative to a normal distribution. Figure 1.16 shows that there are many more small returns (i.e., peakedness) and many more tail events (i.e., fat tails) than in a normal distribution with the same mean and variance. Table 1.7 shows that there are many more tail events than in a normal distribution with the same mean and variance (e.g., 2020:Q1). Table 1.8 shows that there are many more small returns than in a normal distribution with the same mean and variance (e.g., as experienced during all of 2017). Discussion in the nearby text on pp. 68-73 of FFSI gives further details.

- Q67 The t-test of the mean tests a hypothesis about the value of mu, the population mean. The null hypothesis is often H0:mu=0. This is not a test of whether the data are normally distributed or not. It is only a test of whether mu=0 or not. If I reject H0:mu=0, then maybe the data are normally distributed, but with a higher mean than I thought. If I want to test normality, I use a Z{skew} or Z{kurt} or JB=Z{skew}^2+Z{kurt}^2 test, but there is no information about testing skewness or kurtosis here, so there is no information about normality. So, the answer is (d).

- Q73: I had forgotten about Corrections Corp. Their stock has done about 40% worse than the S&P500 over the last year (to May 6, 2020), but with a lot of stock-specific risk. The key here is to understand correlation and R^2. First of all, R^2 = correlation^2. So, correlation = -0.80 implies R^2=0.64. That knocks out two possible answers. Next, the sign of the correlation is the same as the sign of the slope. So, negative correlation means downward sloping. That knocks out one more answer. Lastly, correlation of -0.80 is quite large, and so is R^2=0.64. So, the line of best fit is going to be clear. Only if correlation = 0 would we get a big round ball of points. So, that knocks out one more answer, leaving (d).

- Q82 This picture is Figure 1.16 on p. 71 of FFSI, and is discussed on pp. 68-73. It says there that there are many more observations in the tails than we would expect in a normal distribution. We saw many such observations during the 2020:Q1 covid panic. Thus (a) is the only correct answer.

- Q83 (see also Q45) The question is about whether the t-statistic for the mean is valid or not. The Student t-statistic for the mean has three assumptions: the data are normally distributed, the data are statistically independent of each other, and the data are identically distributed (so their parameters are stable). If any one of these assumptions does not hold, then you have to ask yourself if the test is valid. We get lucky in the case of non-normally distributed data. As long as you have a large enough sample, and the other two assumptions are not violated, then I can prove (using a central limit theorem, and Tchebychev's inequality and Slutsky's theorem) that the Student t-statistic for the mean is still a valid test. This is discussed on pp. 104-106 of FFSI. So, how large is large enough? It turns out that about N=100 is enough in large-cap stocks and N=200 is large enough in most small-cap stocks. We have N=750 in this question, and no other violations of assumptions. So, the test is valid. Answer (c).

- Q86 A student asked why N=20 and not N=40. It is because each observation here is a pair. Imagine plotting these points on an X-Y graph to see how close they are to a straight line (which we did often). Each point requires a pair (R(IBM), R(MSFT)). So, you end up with only 20 points. Thus N=20.

- Q87 Q3.2.2 (Active Alpha Optimization) appears on p. 254 of the Q&A book. See also Q238 which includes a plot of similar data. See also top panel of Figure 1.18 on p. 88 of FFSI (relationship not quite as strong because 500 stocks obscures it a little; stronger results hold in NZ data with fewer stocks).

- Q93 Average dividend yield over the 50-year S&P500 sample was roughly 3.1% (This is exactly the same number as the average dividend yield in a 21-stock sample from the NZX I used in class in 2020; although average NZ dividend yield is usually higher than average US dividend yield, these samples are from different time periods, and average US dividend yields have fallen by 2020 to roughly 2%, and by 2021 to roughly 1.6%). The 3% number is discussed on p. 9 of FFSI. It is a standard number that you should have in your head, but you should be aware of where we are now also.

- Q96 The Spearman rank-order correlation coefficient (SROCC) is strongly negative. The SROCC detects relationships that are generally monotonic. That is, generally upward sloping, or generally downward sloping. In this case, we know that the relationship is generally downward sloping and strongly so. That is all we know. It could be linear, it could be non-linear. We do not have any additional information to help tell us whether the relationship is linear or non-linear. Also, the relationship is strong, not weak. So, each of answers (a), (b), (c), and (d) is false. If this question had said that the traditional Pearson product moment correlation coefficient (PPMCC) was equal to -0.91, then we would know that the relationship was strongly negative and linear, but that is not what we are told here.

- Q98 (see also Q107) This is about OLS and about R^2 just being the correlation squared. Let me review this concept using another important item as an exemplar:

PLOT A* is given here:

PE-GREPS-OEX-2019.pdf. It shows P/E ratio on one axis and analyst estimates of future growth rate in earnings per share on the other (let me call that growth rate "g(EPS)"). The data are the sub-sample of the 100 largest stocks from the US S&P500 index (called the S&P100, or the "OEX"). I argue that the higher the forecast g(EPS), the higher the P/E, and that in theory the slope of the relationship should be about 1, which is what I find. Look at the OLS R^2 of 44%. Can you tell me the correlation between P/E and g(EPS)?

Well, in the old days, correlation was always denoted "r" or "R". I think because it was originally called co-relation, and the r stood out more than it does now. Nowadays, instead of the Latin letter r we usually represent correlation using the Greek equivalent rho, which looks a bit like a little p with its tail off to the right a little. It is the same letter, pronounced as an r, but written with a different symbol in Greek.

To cut a long story short, R^2 is just r squared. In other words, if the R^2 is 44%, get your calculator out and square root it to find that the r (or rho, or correlation) is sqrt(.44) which is 66%.

...but there is a catch. You know how sqrt(4) can be +2 or -2? Well the square root of the R^2 also has a sign. The sign is the sign of the slope of the OLS regression. In my P/E vs g(EPS) case, the slope is positive. So, the correlation is +66%. ...but if that line had been downward sloping, the correlation would have been -66%. Note, of course, that both +66% and -66%, when squared, give an R^2 of 44%.

Incidentally, 44% is unusually large for an R^2 in finance. So, that's a good fit.

Digest this, then go and try Q98 and Q107 again, with your calculator in your hand and awareness of slopes. If you know R^2 = 95%, and you know the slope is positive, then the correlation is positive, but if you do not know the sign of the slope, then you are stuck. You don't know whether the correlation is positive or negative.
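The rule "square root of R^2, signed by the slope" can be written as a tiny Python helper. This is a sketch only; the function name and the convention of returning `None` when the slope sign is unknown are my own assumptions for illustration.

```python
import math


def correlation_from_r2(r_squared, slope_sign):
    """Correlation implied by an OLS R^2 and the sign of the fitted slope.

    slope_sign is +1 or -1; pass None if the sign of the slope is unknown,
    in which case the sign of the correlation cannot be determined.
    """
    if slope_sign is None:
        return None  # stuck: could be +sqrt(R^2) or -sqrt(R^2)
    return math.copysign(math.sqrt(r_squared), slope_sign)


print(correlation_from_r2(0.44, +1))    # upward-sloping P/E vs g(EPS) case, about +0.66
print(correlation_from_r2(0.44, -1))    # same R^2, downward slope, about -0.66
print(correlation_from_r2(0.95, None))  # sign of slope unknown
```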

*PLOT A This OLS line of best fit shows that P/E and forecast g(EPS) are related. So, thinking about P/E as a reflection of forecast growth rates in EPS is justified. R^2=44% implies correlation 66%. [Details: Plot drawn May 5, 2019 showing Bloomberg's BEst_PE_RATIO versus BEST_EST_LONG_TERM_GROWTH for the OEX (S&P100) stocks, where BEST_EST_LONG_TERM_GROWTH is described thus "Long Term Growth Forecasts are received directly from contributing analysts, they are not calculated by BEst. While different analysts apply different methodologies, the Long Term Growth Forecast generally represents an expected annual increase in operating earnings per share over the company's next full business cycle. In general, these forecasts refer to a period of between three to five years."]

- Q99 On Monday I spend $10,000: $6,000 to buy 5 shares of PCLN and $4,000 to buy 1,000 shares of CHK. Those counts of shares are very important here.

On Tuesday, PCLN goes up $30 a share (to $1,230) and CHK goes down $0.25 per share (to $3.75). My portfolio is now worth 5*1230 + 1000*3.75=6150+3750=$9,900.

On Wednesday, PCLN goes up $30 a share (to $1,260) and CHK goes down $0.25 per share (to $3.50). My portfolio is now worth 5*1260 + 1000*3.50=6300+3500=$9,800.

So, from Tuesday to Wednesday, the value of my portfolio goes from $9,900 to $9,800. The return is (final-initial)/initial = (9800-9900)/9900=-0.010101, answer (a).

For me, the key was counting the number of shares. There are other ways to do it. You might have noticed that you gained $150 on PCLN and lost $250 on CHK, giving a loss of $100 on the first day. Similarly, there was a loss of $100 on the second day. So, you could say your $10,000 went to $9,900 and then $9,800, and then find the return.
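Here is the share-counting approach as a short Python sketch. The Monday prices ($1,200 and $4.00) are inferred from the dollar amounts and share counts above; the variable names are mine.

```python
# Share counts are fixed after Monday's purchase.
shares = {"PCLN": 5, "CHK": 1000}

# Prices per share on each day (Monday inferred from $6,000/5 and $4,000/1,000).
prices = {
    "Mon": {"PCLN": 1200.00, "CHK": 4.00},
    "Tue": {"PCLN": 1230.00, "CHK": 3.75},
    "Wed": {"PCLN": 1260.00, "CHK": 3.50},
}


def portfolio_value(day):
    """Value of the portfolio: sum of (count of shares) * (price per share)."""
    return sum(shares[name] * prices[day][name] for name in shares)


values = {day: portfolio_value(day) for day in prices}
ret_tue_wed = (values["Wed"] - values["Tue"]) / values["Tue"]

print(values)                 # 10000.0, 9900.0, 9800.0
print(round(ret_tue_wed, 6))  # -0.010101
```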

- Q100 I particularly like this one, because I published a paper on it with a hedge fund manager. Remember that we discussed t-statistics for the mean in that detailed Section 1.3.16, based on several assumptions. The next section, 1.3.17 asked what if the assumptions don't hold. That's important, because in finance the assumptions often do not hold! There are three assumptions: normality of returns (we know that's not true!), independence of returns, and identical distributions (i.e., stable mean and variance through time). I discussed violations of these assumptions one at a time on pp. 104-108. On p. 107 I say that based on Crack and Ledoit (2010), if the only violation is that you have autocorrelation rho, then all you have to do to correct for this violation of the assumptions of the t-test is to multiply your regular t-statistic by (1-rho). So, in this case, we would get the corrected t-stat = t*(1-rho) = 2.4 * (1-0.25)=1.8, answer (b). In this case, the t-stat goes from being significant to insignificant, and so it really matters.

Here also is my answer to the very similar 2020MTQ23. There is a formula for this (and you can jump down to the bottom of this fat paragraph to see it, but the details are important). Just after we discussed the construction of the t-test of the mean (Section 1.3.16), we discussed (on pp. 104-108 of FFSI) robustness to the three assumptions: normality, independence, identically distributed. On pp. 106-107 we discuss what happens if the independence assumption is violated. The presence of auto-correlation is a violation of the independence assumption because it says that successive returns are statistically related to each other. Near the bottom of p. 106 there is a formula with a "*" beside it. It involves a covariance term, and on the next line the covariance is written as a correlation*stdev1*stdev2. The usual formula for the t-statistic assumes that this correlation between successive returns is zero. So, these cross-product correlation terms do not usually appear. If, however, there is auto-correlation of -25% (as there is in this question) then once you take these (in this case negative) terms into account, the revised/corrected standard error for the t-statistic gets smaller (i.e., it is decreased by these negative terms). The standard error sits in the denominator of the t-statistic (see Equation 1.40 on p. 92). So, if this gets smaller, then the t-statistic as a whole gets bigger. It is a very complex calculation, but I published a paper in 2010 with a hedge fund manager, mentioned in the middle of p. 107, that argues that a very good approximation to the complex calculation is to simply multiply the original t-statistic by (1-rho), where rho is the auto-correlation. In this case, the revised t-statistic that accounts for the auto-correlation is given by t*(1-rho)=1.80*(1-(-0.25))=1.80*1.25=2.25, answer (d). If the auto-correlation were positive, then the revised t-statistic would instead get smaller by a factor of (1-rho), because of a larger standard error.
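The (1-rho) adjustment is a one-liner, sketched here in Python for both Q100 and 2020MTQ23. The function name is my own; the approximation itself is the one described above and applies when auto-correlation is the only violation of the assumptions.

```python
def adjust_t_for_autocorrelation(t_stat, rho):
    """Approximate correction: multiply the usual t-statistic by (1 - rho)."""
    return t_stat * (1.0 - rho)


q100 = adjust_t_for_autocorrelation(2.4, 0.25)     # positive rho shrinks the t-stat
mtq23 = adjust_t_for_autocorrelation(1.80, -0.25)  # negative rho inflates the t-stat

print(round(q100, 2))   # 1.8
print(round(mtq23, 2))  # 2.25
```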

- Q103 Look at the bottom of p. 5 of FFSI. You will see that over the 50-year period, $1 grew to $108.45 in stocks, but only $13.25 in T-bills. The ratio is mentioned there as being close to 8.

- Q107 (see also Q98).

- Q111 In Chapter 1 we emphasized that you only needed to have perfect foresight 1% of the time to add 1% to the annual return over that 50-year period (which then adds half as much again to your ending wealth). This means you need only be perfectly insightful 1 trading day out of every 100 trading days. There are about 21 trading days a month, so that is one day out of every five months. This is discussed on p. 8 of FFSI. [One of the reasons this matters is that if it really takes so little skill to add 1% per annum, we need to ask why so-called "skilled" managers underperform their benchmarks so much of the time. We will see they do terribly over the long run when we discuss the SPIVA results in Section 2.14.8.]

- Q112 This has two diamonds on it because most students do not take the time to look at the basic relationship between correlation, beta, and R^2. Also, we tend not to teach this in stats classes, even though it is not difficult. This is similar to Q33 on the 2020 Otago University FINC302 mid-term exam (Q148/MTQ33). The hint given in Q148/MTQ33 is to look at the formula for beta and the formula for rho (correlation). If you compare the two formulae, you will see that they are very close. The simple relationships are corr(X,Y)=cov(X,Y)/[std(X)std(Y)], beta(X,Y)=cov(X,Y)/[std(X)std(X)] (beta of Y relative to reference X), and R^2(X,Y)=corr(X,Y)^2. So, if std(X)=std(Y), then beta(X,Y)=corr(X,Y) because the denominators are the same. In our case, we are regressing X=R(t) on Y=R(t-1), so std(X)=std(Y) almost perfectly, especially in a large sample, so we immediately get that (a), (b), and (c) are true. Answer (d) is true by definition in this case because they are the same thing. That just leaves answer (e) as the false answer. This is something you can test using data. Just go and grab some returns data with no missing observations, and estimate each.
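Here is a minimal Python sketch of that data test. The twelve returns are made-up numbers for illustration only (they are not real data), and with such a short sample the two standard deviations need not match closely; with a long real sample they should be nearly identical, and then beta and the correlation nearly coincide.

```python
import math


def mean(v):
    return sum(v) / len(v)


def cov(x, y):
    """Sample covariance."""
    mx, my = mean(x), mean(y)
    return sum((a - mx) * (b - my) for a, b in zip(x, y)) / (len(x) - 1)


def std(x):
    """Sample standard deviation."""
    return math.sqrt(cov(x, x))


# Hypothetical returns, purely illustrative.
returns = [0.012, -0.008, 0.003, 0.015, -0.011, 0.004,
           -0.002, 0.009, -0.006, 0.001, 0.007, -0.004]

lagged = returns[:-1]   # R(t-1), the regressor
current = returns[1:]   # R(t), the dependent variable

corr = cov(lagged, current) / (std(lagged) * std(current))
beta = cov(lagged, current) / (std(lagged) * std(lagged))  # OLS slope of R(t) on R(t-1)
r_squared = corr ** 2

print("std(R(t-1)), std(R(t)):", std(lagged), std(current))
print("beta, corr, R^2       :", beta, corr, r_squared)
```

The only difference between `beta` and `corr` is one standard deviation in the denominator, so `beta` equals `corr` times the ratio std(R(t))/std(R(t-1)).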

- Q113 See answer to Q63 and also see the spreadsheet

Q63-Q113-20200610-REVISED.xlsx.

(hit F9 a few times to watch the simulation).

- Q127 The short answer is: multiply the t-stat for the mean by (1-rho) where rho is the auto-correlation. It is discussed on p. 107 of FFSI, in the middle of Section 1.3.17 ("How Robust is the t-Statistic for the Mean?").

The longer answer is: after the lengthy FFSI Section 1.3.16 on building a t-test to test the mean based on the assumptions that the data are normally distributed, independent, and identically distributed, the following Section 1.3.17 asks, one at a time, what if these assumptions do not hold?

On pp. 106-107 it says that if the only problem is a breach of independence in the form of auto-correlation rho not being zero, then a paper I published in 2010 with a (now former) hedge fund manager (Dr. Olivier Ledoit) shows that you just multiply the t-stat by (1-rho).

In this particular case t=2.2, rho=-0.15, so adjusted t = t*(1-rho)=2.2*(1-(-0.15))=2.2*1.15=2.53, which is even more significant.

There is a deeper (and slightly difficult) discussion of intuition in the middle of pp. 106-107 that argues that the usual t-stat has a standard error (in the denominator) that assumes that the variance of the sum is the sum of the variances. In the case of negative auto-correlation, however, a whole bunch of negative cross-product terms arise that are being ignored in the usual standard error calculation (I see a rho term appearing in the step after the asterisk on p. 106 in a simple two-observation case). So, the genuine standard error (accounting for the rho terms) is smaller than what the usual calculation would give (which assumes that these cross-product terms are zero). So, we need to inflate the t-statistic in this case. 30 pages of PhD-level algebra later, it turns out that just multiplying the t-stat by (1-rho) does the job.

- Q119 Please see the spreadsheet

http://www.foundationsforscientificinvesting.com/normal-pdf-discretization-2021.xlsx.

- Q130 A student asked "How are we able to calculate the t-stat when we don't have information on the standard deviation of the data? Do you simply use Mean/(sqrt(1/n))?" The answer is twofold. First, no, that sqrt(1/n) is an estimate of the standard error for the correlation coefficient in the case where N is large and correlation rho is small (Equation 1.44 in FFSI; see Q10 above). Second, you are supposed to know the standard deviation of these data because the number is roughly the same for broad market indices in the US, UK, NZ, Japan, Germany, Australia, etc. This missing number is the number you always have in your head when you look at the daily return on a broad market index, to judge whether today's move is significant or not. It is 1%, as discussed in the second sentence of Section 1.3.11 on kurtosis. See Q52 and Q256 which use the same knowledge.

- Q134/2020MTQ22 This is based on pp. 80-83 of FFSI. This is a simplified version of the more complicated Q63 and Q113 in the Q&A book. See the spreadsheet I built to go with the solutions to Q63 and Q113: Q63-Q113-20200610-REVISED.xlsx

and hit F9 a few times to watch the simulation. The spreadsheet is also a wonderful opportunity to see the Merton Brownian motion random walk process in action: I used Equation 2.2 (discrete-time geometric Brownian motion RW) to generate the continuously compounded returns (CCR) r(n)=mu*tau+sigma*sqrt(tau)*z(n) and then I used P(n+1)=P(n)*exp(r(n)) to generate my simulated prices. With correlation questions like this, always go back to basics. The correlation is the covariance divided by a product of standard deviations, like in Equation 1.41 on p. 93 of FFSI. So, the correlation takes its sign from the covariance in the numerator. So, it comes down to the sign of the covariance. Now go back to basics again. The covariance is given by Equation 1.36 on p. 84 of FFSI. It is a product of moments: E[(X-mean)(Y-mean)]. So, the sign of the covariance is determined by whether the two terms (X-mean) and (Y-mean) have the same sign or different sign. If both X and Y tend to be above their means at the same time, and below their means at the same time, then you get positive*positive and negative*negative, both of which yield a positive covariance and thus a strong positive correlation. If, however, X tends to be above its mean when Y is below its mean, and vice versa, then you get positive*negative and negative*positive, both of which yield a negative covariance and thus a strong negative correlation. In 2020MTQ22, I told my students that FPH rose in price slowly and steadily by 34% and FBU fell slowly and steadily by 33%. Qualitatively, the plot must look like the red and the green plots in my spreadsheet simulation. The prices must be strongly negatively correlated, because in the first half (more or less) of the plot {P(FPH)-mean[P(FPH)]} is negative but {P(FBU)-mean[P(FBU)]} is positive. In the second half (more or less) of the plot {P(FPH)-mean[P(FPH)]} is positive but {P(FBU)-mean[P(FBU)]} is negative.
So, in the first half of the plot the product of moments is mostly negative, and in the second half of the plot, the product of moments is also mostly negative. There is only one answer here with a strong negative correlation: Answer (a). Unlike the questions in the Q&A book, I did not give enough other information here to deduce the correlation of returns, but you do not need it to pinpoint an answer.

- Q138/2020MTQ10 You should not be taking any analytical derivatives. You may have practiced calculating numerical derivatives using a given table of values. It was done that way in FFSI (pp. 33-35), and in several questions in the Q&A Book (e.g., Q6, Q12). There is, however, no table here. That's OK, because all you need is two values of the function, evaluated very close together. Those values could come from a table. Without a table, you can use the given function to build your own table. Of course, you only need two rows in the table, let's say at x=10, and x=10.001. So, let me find

slope=[f(x+h)-f(x)]/h

= [f(10.001)-f(10.0)]/0.001

= [log(sqrt(10.001)*e^{sqrt(10.001)})- log(sqrt(10)*e^{sqrt(10)}) ]/0.001

=[4.3137783-4.3135702]/0.001=0.208105, answer (f).

The step size h does not have to be 0.001, it just seemed like a good small number in this case. For example, you could try 0.01 or 0.0001, and the answer should still be about the same. That formula slope = [f(x+h)-f(x)]/h is the definition of slope. (Well, technically, the definition is to use this formula and let h go to an infinitesimally small value, but we are just approximating this with a small h.)
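If you would rather let a computer build the two-row table, here is a minimal Python sketch. I am assuming that "log" is the natural logarithm (which matches the function values 4.3137783 and 4.3135702 above); the function names are my own.

```python
import math


def f(x):
    """f(x) = log( sqrt(x) * e^{sqrt(x)} ), using the natural log."""
    return math.log(math.sqrt(x) * math.exp(math.sqrt(x)))


def forward_slope(func, x, h):
    """Numerical derivative: [f(x+h) - f(x)] / h for a small step h."""
    return (func(x + h) - func(x)) / h


# Try several step sizes; the answers should all be about the same.
for h in (0.01, 0.001, 0.0001):
    print(h, forward_slope(f, 10.0, h))
```

Each step size should give a slope of roughly 0.208, which is the point of the remark that the exact choice of h does not matter much as long as it is small.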

When you worked on Q3.2.2 (Active Alpha Optimization; p. 254 Q&A Book), Excel's Solver was doing exactly this. It was calculating changes in the value of the objective function for a small step in each of the 21 choice variables, and then moving your choice variables in a direction that was up hill, but subject to the constraints.

- Q140/2020MTQ5 This follows on from our Chapter 1 Section 1.3.13 true diversification discussion. One student asked if it is "none of the above," then what we should add to a stock portfolio to obtain true diversification benefits? Several ideas come to mind: a small holding in high-yield bonds via a high-yield bond fund (also known as junk bonds; they have lower creditworthiness and higher yields than investment-grade bonds, and can be more stock like than investment grade bonds); commercial real estate (either directly or through an investment fund); residential real estate (either directly or through an investment fund); exposure to some commodities (e.g., there is evidence that a small gold exposure is risky enough to diversify a stock portfolio and improve Sharpe ratios); some would say a small cryptocurrency holding, but I think this is not sensible; ownership in a private company (either directly, or via a fund); a small hedge-fund exposure (perhaps through a fund of funds); ownership of your own small business, etc.

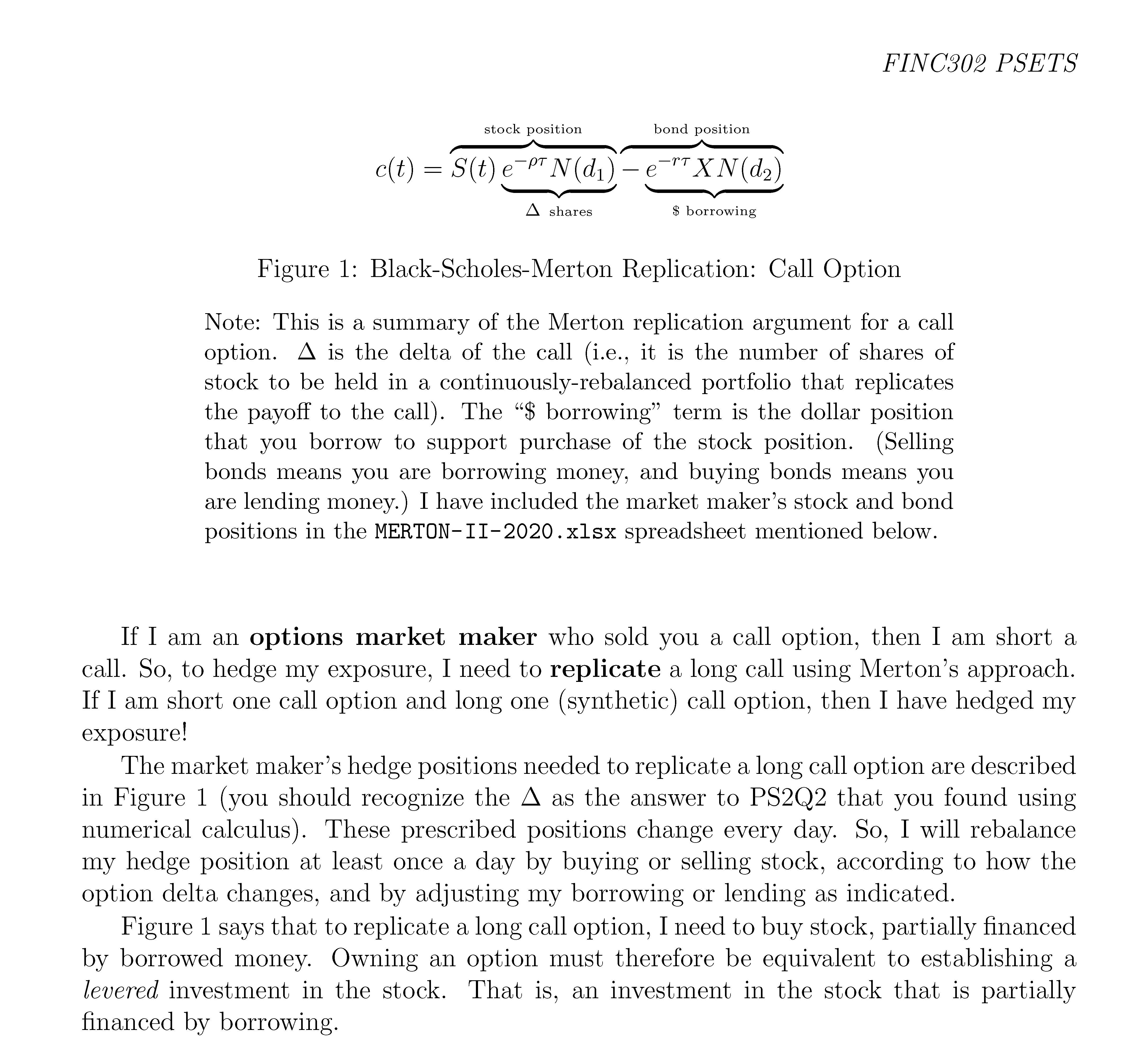

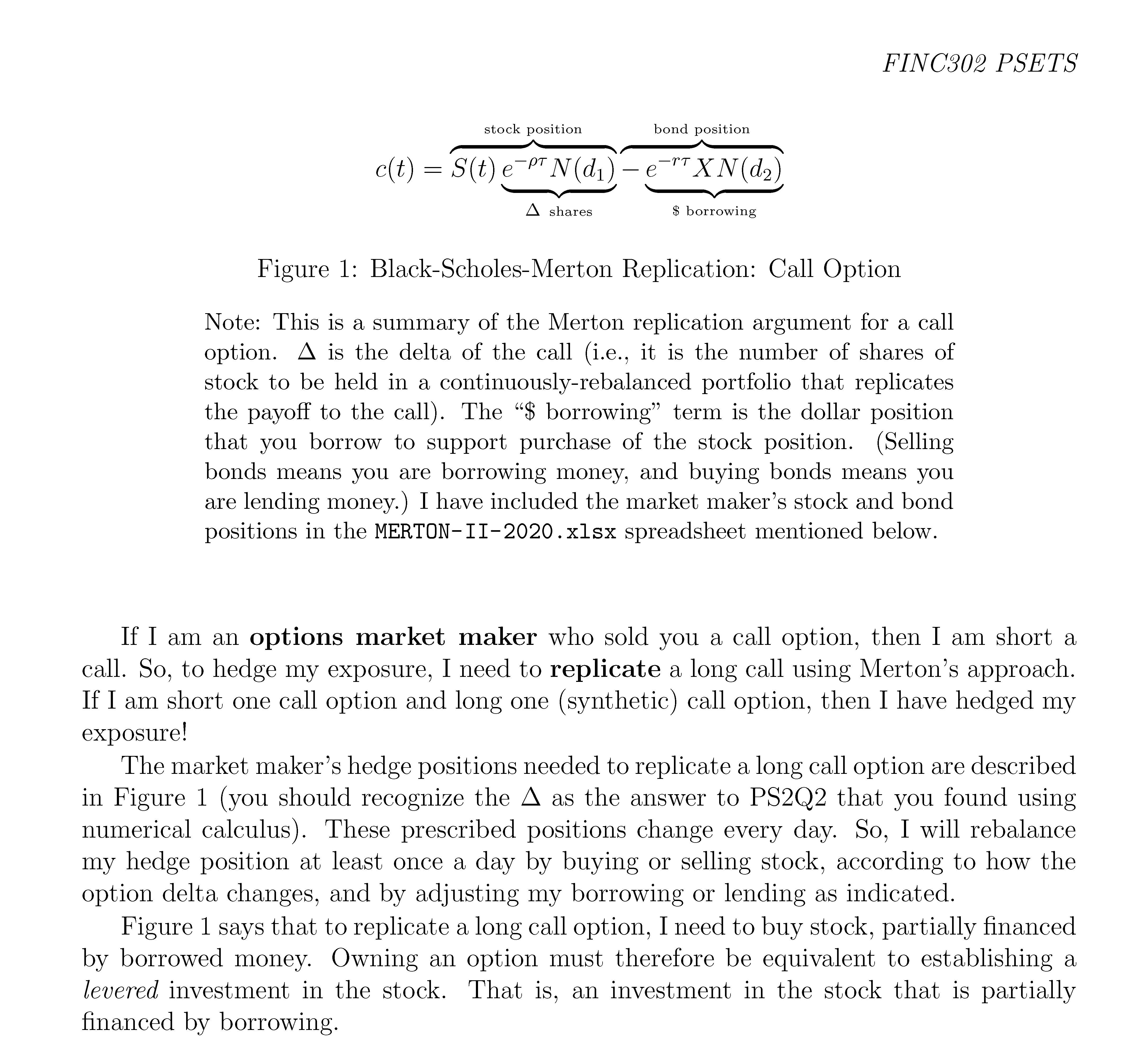

- Q142/2020MTQ9 The CAPM equation is given: E(R)=RF+beta*[E(RM)-RF]. We need to put in the inputs and see which range of possible answers the E(R) number we get falls into. Assume at first that I do not recall the actual precise numbers. Before I do anything, my gut instinct is that the long-term rate of return on the market portfolio (using equity only) is something like 9% or 10%. That is what I would get if my stock had a beta of 1. So, if I have a stock with a beta of 1.2, I expect a slightly higher number. That extra .2 in the beta is applied only to the market risk premium, so now I am tilting towards Answer (c), because I think that adds only about 1% to my previous 9% or 10% number. Now let me use the actual numbers (p. 7 of FFSI): RF=5.3%, MRP=4.53%, so the CAPM yields 5.3%+1.2*4.53%=10.736%. Both approaches put the answer in the range given in Answer (c).
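The CAPM arithmetic can be checked with a few lines of Python. This is just a sketch of the equation given in the question, with the market risk premium MRP=E(RM)-RF passed in directly; the function name is mine.

```python
def capm_expected_return(rf, beta, mrp):
    """CAPM: E(R) = RF + beta * [E(RM) - RF], where mrp = E(RM) - RF."""
    return rf + beta * mrp


e_r = capm_expected_return(rf=0.053, beta=1.2, mrp=0.0453)
print(f"E(R) = {e_r:.5f}")  # 0.10736, i.e., 10.736%
```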

- Q148/2020MTQ33 Follow the hint! Look at the equation for beta and the equation for rho (i.e., correlation). They differ in only one term. All you need is std(x)=std(y), or approximately so to get beta=rho. In cases (a) and (b) these two equations give basically the same answer, so beta = rho, more or less, in both cases.

- Q150/2020MTQ26 I often asked my students to execute exactly this test. The statistic STAT = Zskew^2 + Zkurt^2 is the sum of the squares of two independent standard normal random variables (each is described on p. 58 of FFSI). So, by the definition of a chi-squared random variable (e.g., p. 59 of FFSI) it must be distributed chi-squared with 2 degrees of freedom. So, I am leaning towards (a), (b), or (c) already. These Z-stats are close to zero when the data are normally distributed, and far from zero otherwise. Because both Z-stats are squared, the only way to get a rejection is if the statistic falls into the upper tail of the distribution (chi-squared tests typically use only the upper tail, even if the alternative hypothesis is two-sided). So, it must be answer (b). You can use the Excel sheet (MONTE-CARLO-EXERCISES-2020.xlsx) to simulate a chi-squared with 2 degrees of freedom, so that you could see roughly where the upper critical 5% value should be (it is at about STAT=6).
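You can also pin down that "about 6" without simulation. A chi-squared random variable with 2 degrees of freedom has upper-tail probability exp(-x/2), so the upper critical value at level alpha is -2*ln(alpha). The Python sketch below uses that standard fact; the function names and the example Z values are my own, for illustration only.

```python
import math


def chi2_2df_upper_critical(alpha):
    """Upper-tail critical value for chi-squared with 2 degrees of freedom.

    Uses P(X > x) = exp(-x / 2), so x = -2 * ln(alpha).
    """
    return -2.0 * math.log(alpha)


def reject_normality(z_skew, z_kurt, alpha=0.05):
    """Reject normality if STAT = Zskew^2 + Zkurt^2 lands in the upper tail."""
    stat = z_skew ** 2 + z_kurt ** 2
    return stat > chi2_2df_upper_critical(alpha)


crit = chi2_2df_upper_critical(0.05)
print(round(crit, 2))              # 5.99
print(reject_normality(0.5, 0.5))  # STAT = 0.5, no rejection
print(reject_normality(1.0, 3.0))  # STAT = 10, rejection
```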

- Q154/2020MTQ13 is about non-normality of returns. This is discussed in great detail on pp. 68-73 of FFSI. There are too many tail events and too many calm days for the distribution to be normally distributed.

- Q158/2020MTQ24 The form of this F-test is simply a ratio of sample variances. Students are often mistakenly tempted to put "N1" and "N2" into the test statistic. This error comes from a lack of understanding of the underlying intuition. This F-test is described in the box on p. 103 of FFSI. It builds upon the intuition we discussed for the chi-squared distribution (leading into the derivation of the t-statistic) and for the F-distribution. In 35+ years of reading stats books, I have not found a simpler explanation than this one.

I do find that it helps to look at simulations in spreadsheets. For example, these simulations for the chi-squared and t-distribution: MONTE-CARLO-EXERCISES-2020.xlsx. Can you amend my sheet to do the same for an F-distribution?

So, if these arguments do not help, then perhaps you just need to remember that the F-test for differences in dispersion is just the ratio of the sample variances. Nevertheless, please do ask if anything in particular is unclear. I will do my best to improve it.

- Q159/2020MTQ25 Answers (c) and (d) are the two scenarios under which the t-stat of the mean is invalid. The question asks when the traditional t-statistic for the mean is invalid. Note that in case (c) the traditional t-statistic for the mean is invalid because of dependence. (Yes, in case (c) you can adjust it as described on p. 107 of FFSI, but then you no longer have the traditional t-statistic for the mean.)

- Q161/Q133 (see also Q425) In this question, the T-costs are the (ask price - mid-spread price)*quantity + commission. So, that is ($6.34-$6.295)*100 + $30 = $34.50. Let me add some additional details: The total cost of the stock, including all T-costs, is (ask price)*quantity + commission = $6.34*100 + $30=$634 + $30 = $664. The fair value of the stock, however, was only (mid-spread price)*quantity = $6.295*100 = $629.50, where $6.295 is the mid-spread price. The difference between these two numbers is the answer to the question asked.
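Both routes to the T-cost number can be checked in a few lines of Python. This is a sketch using the numbers quoted in this answer; the variable names are mine.

```python
ask = 6.34          # ask price per share
mid = 6.295         # mid-spread (fair value) price per share
quantity = 100      # shares bought
commission = 30.00  # broker commission

# Route 1: half-spread paid on every share, plus commission.
t_costs = (ask - mid) * quantity + commission

# Route 2: total outlay minus fair value of what you bought.
total_paid = ask * quantity + commission
fair_value = mid * quantity
difference = total_paid - fair_value

print(round(t_costs, 2), round(difference, 2))  # 34.5 34.5
```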

[Follow up response to further questions: If the ask price is $6.34, and you buy 100 shares, then you pay $6.34 per share, and that includes the T-cost of the half-spread above fair value. The half-spread cost that is built into this stock price is unavoidable. The $6.34 price tag of the stock includes the underlying T-cost of the spread that results from the way the NZX does business.

In the morning and the afternoon, the bid-ask spreads tend to be wider, even if fair value does not change.

The $6.34 price tag does not, however, include the commission you pay to your broker to let you bring your trade to the NZX.

Analogy Suppose the fair value of a can of baked beans is $1. If you buy the beans at the supermarket, maybe the price tag is $1.20. If you buy the beans at the corner dairy, however, then maybe the price tag is $1.50. The fair value of the beans is the same in both cases, but if you buy the beans at the corner dairy, then they pass their higher T-costs on to you in the form of a higher price tag. If you have to take the bus to go to the store to buy the beans, then the price of the bus ticket is added to your T-costs of buying groceries, but it is not displayed on the price tag of the beans in the store.

The $1 fair value of the beans is like the mid-spread value. The $1.20 price is like a middle-of-the-day ask price. The $1.50 price is like an early morning or late afternoon ask price. The bus ticket price is like the commission.]

- Q162/Q134 This is one of my favorite parts of the course because it is both very simple to explain and very applied to you in your personal investing. Let R be the return before fees. Let e be the expense ratio (that is, the fee) per annum. So R=0.0800 and e=0.0005 here. Then the net return on the fund is R-e=0.0795, because the fee is subtracted each year.

Then without the fee (i.e., gross): FV=$10,000*(1+R)^N=10000*1.0800^10=$21,589.25

With the fee (i.e., net): FV=$10,000*(1+R-e)^N=10000*1.0795^10=$21,489.51

The difference is about $100, answer (c).

Jack Bogle loved this sort of calculation. Now try it with e=1.25%, the typical fee for an actively managed fund. It is a simple argument for buying low-fee funds, as discussed in Section 2.4.7.
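Here is the fee calculation as a short Python sketch, so that you can try other expense ratios easily. The function name is mine; the inputs are the ones used in this answer, plus the e=1.25% case.

```python
def future_value(principal, gross_return, expense_ratio, years):
    """FV = principal * (1 + R - e)^N, with the fee subtracted each year."""
    return principal * (1.0 + gross_return - expense_ratio) ** years


gross = future_value(10_000, 0.08, 0.0, 10)        # no fee
low_fee = future_value(10_000, 0.08, 0.0005, 10)   # e = 0.05%
high_fee = future_value(10_000, 0.08, 0.0125, 10)  # e = 1.25%

print(f"gross    : {gross:,.2f}")
print(f"low fee  : {low_fee:,.2f}  (cost of fee: {gross - low_fee:,.2f})")
print(f"high fee : {high_fee:,.2f}  (cost of fee: {gross - high_fee:,.2f})")
```

The low-fee case costs about $100 over the ten years; run the sketch to see how much more the 1.25% fee takes.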

- Q167/Q139 We often focus on KiwiSaver rules and regulations.

- Q168/Q140 Let me reassure you first that I am not going to ask you any exam questions this year about stock splits. There has been a dramatic reduction in their use in the last five to ten years. This must be an old question. A stock split is a simple idea. Suppose you bought a $10 share of stock and the company did really well and the stock price went to $30 a share. The company might declare a "three-for-one" stock split. They take back your $30 share and give you three newly issued ones worth $10 each. Economically, you have the same value of stock ($30) but the count of shares you hold has increased. Let us work it out.

Assume that you buy one share at the ask on Monday (1080). It looks like a four-for-one split took place (which you correctly guessed). This means that walking into Friday you have 4 shares. Each share pays you a dividend of 25, and can be sold for 225 (the bid on Friday).

So, return = (final-initial+dividends)/initial = (4*225-1080+4*25)/1080=-80/1080=-7.4%, answer (c).

I think you will see the logic. I am guessing that you did not collect 4 dividends when you did it. Easy to miss.
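A minimal Python sketch of this return calculation, assuming (as in the working above) that one share is bought before the split and that the dividend is paid on each post-split share. The function name `split_adjusted_return` is hypothetical.

```python
def split_adjusted_return(buy_price, sell_price, dividend_per_share, split_ratio):
    """Simple return on ONE share bought before a split_ratio-for-one split."""
    shares_after_split = split_ratio                  # one old share becomes split_ratio new shares
    final_value = shares_after_split * sell_price     # sold at the bid
    dividends = shares_after_split * dividend_per_share
    return (final_value - buy_price + dividends) / buy_price

r = split_adjusted_return(buy_price=1080, sell_price=225,
                          dividend_per_share=25, split_ratio=4)
print(f"return = {r:.1%}")
```

Setting `split_ratio=4` but collecting only one dividend is the easy mistake mentioned above; the function avoids it by scaling both the sale proceeds and the dividends by the split ratio.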

- Q172/Q144 (see also Q215/Q187, Q310/Q282) Each of answers (a), (b), and (c) is a correct statement, but (c) is not relevant to the question (Answer (c) might as well say that "the sky is blue on a sunny day"; true, but not relevant here).

- Answer (a) is correct, because most investors realize that in order to build wealth over the long run, they must take exposure to risky assets like stocks. So, they are resigned to the fact that they must be in stocks. That's an unchanging given, and so is not part of our decision making process. That's why we can remove benchmark return and risk from our objective function. After that, we ask the question "Do they want us, as portfolio managers, to step away from that benchmark?" Our clients are much more fearful of us doing this, using stock selection or benchmark timing, than they are of us just passively following the benchmark. Ultimately the answer is that we take on active risk only if the active return outweighs the risk taken, and we have the client's permission to do so.

- Answer (b) is really just a mathematical expression of the same statement made in Answer (a). That is, Answer (a) and Answer (b) are saying the same thing, and they are the reason why we can remove benchmark return and risk from our objective function.

- Answer (c) is about whether, when an active portfolio manager steps away from the benchmark, they should engage in benchmark timing. The statement is correct (most institutional asset managers, that is, asset managers managing money on behalf of other institutions, do not engage in benchmark timing), but Answer (c) is about stepping away from the benchmark, while the question is about the underlying benchmark itself. So, Answer (c), although a correct statement, does not answer the question. Note that there are many asset managers who engage in benchmark timing, but for asset managers managing money for other institutions it is a minority.

- Thus, Answer (e) is correct.

- Q173/Q145 As discussed in FFSI on p. 312, you need T>=N for the VCV to be invertible. Three months of data is about T=3*21=63 observations. If N=100 stocks, then T<N and the VCV is not invertible.

- Q175/Q147 FLAM says IR=IC*SQRT(BREADTH). If you multiply the BREADTH by 2, then, because the BREADTH appears under the square-root sign, the IR increases by a multiplicative factor of SQRT(2). So, IR increases by about 40%.

- Q178/Q150 The consequences of a margin trade going against you appear in Sections 2.4.5 and 4.3.2 of FFSI.

- Q179/Q151 Betas relative to T are perfectly related to returns, like in the CAPM. So, if the stock returns (for TEL, FPH, PPG) are less than RF, then they must have negative betas, like in the CAPM.

- Q181/Q153 Answer (c) is not correct because empirical evidence I have seen suggests that on small U.S. stock orders, ECNs and the NYSE have roughly the same costs, and in large orders, the NYSE has a cost advantage. This topic needs to be revised in the next edition.

- Q182/Q154 Dividend time lines appear in Section 2.5.1 of FFSI.

- Q183/Q155 It is a market order to buy 100,000 shares. So, the first thing to do is look and see if anyone is offering that many shares. Yes, I see 75,000 offered at 387, 10,000 at 388, 10,000 at 389, and we will need 5,000 out of those offered at 390 (all in cents per share). So, total cost is the sum product of those: (75,000*$3.87)+(10,000*$3.88)+(10,000*$3.89)+(5,000*$3.90)=$387,450. There were 100,000 shares purchased, so the average price is $387,450/100,000=$3.8745, or 387.45 cents per share. We usually quote everything in dollars now, but the principle is the same.
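A minimal Python sketch of a market buy order walking up the ask side of the book. Prices are kept in integer cents to avoid rounding noise. The depth of 20,000 shares at 390 is an assumption for illustration only (the working above only tells us that at least 5,000 are offered there), and the function name `walk_the_book` is hypothetical.

```python
def walk_the_book(asks, quantity):
    """Fill a market buy order against asks sorted from lowest price up.

    asks: list of (price_in_cents, shares_offered).
    Returns (total_cost_in_cents, average_price_in_cents).
    """
    remaining = quantity
    total_cost = 0
    for price, depth in asks:
        fill = min(remaining, depth)   # take what is offered at this level, up to what we still need
        total_cost += fill * price
        remaining -= fill
        if remaining == 0:
            break
    if remaining > 0:
        raise ValueError("not enough depth on the ask side to fill the order")
    return total_cost, total_cost / quantity

# Depth at 390 is assumed; the other levels are from the working above.
asks = [(387, 75_000), (388, 10_000), (389, 10_000), (390, 20_000)]
total_cents, avg_cents = walk_the_book(asks, 100_000)
print(f"total cost = ${total_cents / 100:,.2f}, average = {avg_cents:.2f} cents per share")
```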

- Q185/Q157 This is about order precedence.

In (a), the limit order will sit in line behind the 75,000 shares already there, whereas the market order is immediately executable, so that is false.

In (b), the market order is immediately executable and the limit order will wait in line, so that is true.

In (c), the limit order is marketable, like the market order. So that is true.

Hence answer (e) is correct.

It is not that market orders are executed more quickly than limit orders, but that marketable limit orders and market orders (assuming sufficient depth) are both immediately executable, and limit orders that arrive and have to wait in line behind other orders are not immediately executable.

- Q186/Q158 Fund fees appear in Section 2.4.7.

- Q187/Q159 If you sold an option on one share, then you trade delta shares of stock to hedge it. If you sold an option on N shares, then you trade N*delta shares of stock to hedge it. If delta is positive, then you buy stock to hedge. If delta is negative, then you sell stock to hedge.

- Q188/Q160 We have not dealt with this exactly, but we have dealt with many parts:

You are buying, so you hit the ask: $0.95.

This is a quote per share. The contract covers 100 shares. So the option price is $95 for one contract.

The commission is a flat $10 plus a $0.75 per contract fee. You buy one contract only, so you pay $10.75 commission. Add that to the option price to get $105.75.

OK? We have not done protective puts, though, arguably, we should have.
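A minimal Python sketch of the total outlay, using the numbers from the steps above. The function name `option_purchase_cost` and its parameter names are hypothetical.

```python
def option_purchase_cost(ask_per_share, contracts, flat_fee, per_contract_fee,
                         shares_per_contract=100):
    """Total cash outlay to buy option contracts at the ask, including commission."""
    premium = ask_per_share * shares_per_contract * contracts
    commission = flat_fee + per_contract_fee * contracts
    return premium + commission

total = option_purchase_cost(ask_per_share=0.95, contracts=1,
                             flat_fee=10.00, per_contract_fee=0.75)
print(f"total outlay = ${total:.2f}")
```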

- Q191/Q163 Let me use simple net return (SNR) =(final-initial+dividend)/initial.

SNR(1)=(430-455+30)/455=5/455=0.010989

SNR(2)=(425-430+0)/430=-5/430=-0.011628, giving answer (a). The important part is knowing where the dividend goes. This has not been emphasized in this context, but the SNR formula is one you should know.
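A minimal Python sketch of the simple net return formula applied to the two periods, with the dividend placed in the first period as in the working above. The function name `simple_net_return` is hypothetical.

```python
def simple_net_return(initial, final, dividend=0.0):
    """SNR = (final - initial + dividend) / initial."""
    return (final - initial + dividend) / initial

snr1 = simple_net_return(initial=455, final=430, dividend=30)  # dividend received in period 1
snr2 = simple_net_return(initial=430, final=425)               # no dividend in period 2

print(f"SNR(1) = {snr1:.6f}")
print(f"SNR(2) = {snr2:.6f}")
```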

- Q194/Q166 From Q3.2.2 (Active Alpha Optimization; p. 254 Q&A Book), the key is that the IR=alpha/omega, and the omega appears in the middle term of the objective function Equation 2.81 p. 290. That is, omega^2=sigmaP^2-sigmaB^2. So, you need IR=alpha/omega, where alpha=0.04300 is given to you, and omega^2=sigmaP^2-sigmaB^2 = 0.1240^2-0.1063^2. So, IR=alpha/omega=0.04300/[sqrt{ 0.1240^2-0.1063^2 }] = 0.04300/0.0638459 = 0.6734957, answer (c). In this question you were supposed to spot that the entire setup looked like Q3.2.2 (Active Alpha Optimization; p. 254 Q&A Book): I gave you sigmaB and I gave you sigmaP and I told you that beta=1 (which means that omega^2=sigmaP^2-sigmaB^2) and you are calculating an IR like in Q3.2.2 (Active Alpha Optimization; p. 254 Q&A Book). So, there were lots of cues and clues.
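A minimal Python sketch of this information ratio calculation, assuming beta=1 so that omega^2=sigmaP^2-sigmaB^2 as stated above. The variable names are my own shorthand.

```python
import math

alpha = 0.04300    # given
sigma_p = 0.1240   # portfolio risk, given
sigma_b = 0.1063   # benchmark risk, given

# With beta = 1, active variance is portfolio variance less benchmark variance.
omega = math.sqrt(sigma_p**2 - sigma_b**2)
ir = alpha / omega

print(f"omega = {omega:.7f}, IR = {ir:.4f}")
```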

- Q203/Q175 If you are uninformed, then the price rise is temporary only, and these higher prices are T-costs, and they are unambiguously bad for you (answer (b)). This higher price will be recorded as a transaction price that is disseminated online, but there is no information in it. In a liquid stock, the order book on the ask side will fill back in again in a minute or two. If you think this price rise is good for you, then you have to ask what is the next step? If the next step is to sell your stock, then at what price can you sell it? The answer is that it will be at the bid price, and if the order is large enough, price impact when you sell means that you will walk into the CLOB and sell some of your stock at prices even worse than the bid price. This means that every price you sell at (from the bid price down) is lower than every price you bought at (from the ask price up). This is not good for you. When I mark to market (that is, when I value my stock holdings), I always use the bid price. Even that may be optimistic, given possible market impact. Your trade did not move the bid price, so you did not change the price you can sell your stock at, so there is nothing good in this price impact.

- Q207/Q179 This is the FLAM IR=IC*sqrt(BR). We mentioned this on p. 304 of FFSI. BR is breadth = number of stocks you follow * number of independent forecasts of return you make on them per annum. If we multiply BR by 1/2 (by halving the count of forecasts), then that half gets square rooted, and sqrt(1/2)=0.7071 approx 0.71. Conversely, if we doubled the breadth, then the BR would go up by a factor of 2, and so the IR would go up by a factor of sqrt(2). Do you see? Ask again if not.
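A minimal Python sketch of how the IR scales with breadth under the FLAM. The IC of 0.05 and the starting breadth of 100 are hypothetical values chosen only for illustration; the scaling factors do not depend on them.

```python
import math

def information_ratio(ic, breadth):
    """FLAM: IR = IC * sqrt(BR)."""
    return ic * math.sqrt(breadth)

ic = 0.05            # hypothetical information coefficient
base_breadth = 100   # hypothetical breadth

base = information_ratio(ic, base_breadth)
halved = information_ratio(ic, base_breadth / 2)
doubled = information_ratio(ic, base_breadth * 2)

print(f"halving breadth scales IR by  {halved / base:.4f}")
print(f"doubling breadth scales IR by {doubled / base:.4f}")
```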

- Q208/Q180 The correct answer is that the standard error of the estimator of the mean is unchanged.

- Q211/Q183 (see also Q232/Q204) Active anything (return, portfolio weights, risk, beta) is always portfolio quantity less benchmark quantity:

active return=RP-RB

active weights=hP-hB

active risk=omega, where omega^2=sigmaP^2-sigmaB^2 when beta=1

active beta=betaP-betaB=betaP-1

So, it is answer (e), none of the above, because the two correct answers are not given together.

- Q214/Q186 IR=alpha/omega, where omega is active risk. She needs to generate enough alpha to deduct expenses and fees of 100 bps and still have 50 bps left over for the client. So, she needs an alpha of 150 bps. With an IR of 0.60, she needs an omega of 250 bps. Think of this as walking up the budget constraint in Figure 2.17 (p. 296) until the omega is high enough to generate a target alpha.
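A minimal Python sketch of this step, rearranging IR=alpha/omega to omega=alpha/IR with everything in basis points. The function name `required_active_risk` is hypothetical.

```python
def required_active_risk(fees_bps, client_alpha_bps, ir):
    """Active risk (omega, in bps) needed so that alpha covers fees plus the client's target."""
    required_alpha_bps = fees_bps + client_alpha_bps
    return required_alpha_bps / ir

omega_bps = required_active_risk(fees_bps=100, client_alpha_bps=50, ir=0.60)
print(f"required omega = {omega_bps:.0f} bps")
```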

- Q215/Q187 (See also Q172/Q144 and Q310/Q282 on similar topics). Each of answers (a), (b), and (c) is a factually correct statement, but only answer (a) answers the question posed. The question is about benchmark return and risk, and why we dropped them from our objective function (see bottom p. 290 of FFSI). Answer (b) is, however, about benchmark timing, that is stepping away from the benchmark with a beta that is not equal to 1. Answer (c) is about aversion to active risk, that is, aversion to the risk engendered by stepping away from the benchmark. Whether this aversion is zero, low, medium, or high, we will still drop the benchmark return and risk terms from the objective function because they are not a function of our choice variables. Note that I don't think we used the phrase "maverick risk" this year, but it is defined in the question and the basic idea should be clear.

[Additional deeper details on the comparison of Q172/Q144 and Q215/Q187 which you do not need to read unless you are very interested: In Q172/Q144, answer (a) says that investors are resigned to facing the risk of the benchmark. That is, investors have accepted that although they fear benchmark risk, they must expose themselves to it over the long run, in order to earn the long-run equity market risk premium, in order to build wealth. As a consequence, we, as buy-side portfolio managers, have already won that sales pitch. Even Jack Bogle made that sales pitch successfully, and he was pushing passively managed funds and he was dead set against active portfolio management. So, the investors are already on the "invest in the stock market" roller coaster ride, and we can drop that term from our objective function. The remaining terms are concerned with active positions: benchmark timing and stock selection.

Now think about that active management. Well, in Q215/Q187, answer (c) says that investors fear maverick risk more than they fear benchmark risk. Yes, that is true. Many investors have no faith in our ability as fund managers to pick stocks or time the market. So, they have a much higher aversion to active risk than they do to passive benchmark risk. This is going to be a tougher sales pitch. So, in building those other components of our objective function, we need to use a higher risk aversion coefficient than we used for benchmark risk. You see Equation (2.75)? It has three lambdas in it. The fear of maverick risk means that lambdaBT (aversion to benchmark timing risk) and lambdaR (aversion to stock selection risk) are each higher than lambdaB (aversion to benchmark risk). So, this "maverick risk" aversion statement is a statement about the relative size of those three lambdas, not about whether that first benchmark return and risk term belongs in our ultimate objective function. If you really want to, you can leave the first term in Equation 2.75 (kappaB-lambdaB*sigmaB^2) in the objective function. You do not have to drop it off. It is just that it is not a function of our choice variables, so it is easier to drop it off. Whether it is there or not, however, those lambda risk aversion terms have to have the relative sizes mentioned, and that is what this maverick risk statement is about. It is not about the ability to discard the first term.

End of extra details.]

- Q219/Q191 For telephone orders, the broker charges 70 bps (0.70%) with a minimum fee of $35. It is a $12,000 trade, so the commission is $12,000*0.007=$84. That is above the minimum of $35.

- Q220/Q192 For internet orders, the broker charges 30 bps with a minimum commission of $30. 10,000 shares at $2.20 costs $22,000. $22,000 times 0.003 is $66. When I sell 1,000 shares at $2.10 the value is only $2,100. Then $2,100 times 0.003 is only $6.30, but they say the minimum commission is $30. So they will charge me $30. This is an actual commission schedule from ASB Securities in NZ. So, the total is $66+$30=$96 commission.
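A minimal Python sketch of a percentage commission with a minimum fee, applied to the telephone trade in Q219/Q191 and to the two internet trades in Q220/Q192. The function name `commission` is hypothetical; the rates and minimums are the ones quoted above.

```python
def commission(trade_value, rate, minimum):
    """Percentage commission, floored at the broker's minimum fee."""
    return max(trade_value * rate, minimum)

# Telephone order: 70 bps, minimum $35
phone = commission(12_000, 0.0070, 35)

# Internet orders: 30 bps, minimum $30
buy = commission(10_000 * 2.20, 0.0030, 30)   # percentage fee is above the minimum
sell = commission(1_000 * 2.10, 0.0030, 30)   # percentage fee is below the minimum, so the minimum applies

print(f"telephone commission: ${phone:.2f}")
print(f"internet buy: ${buy:.2f}, sell: ${sell:.2f}, total: ${buy + sell:.2f}")
```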

- Q221/Q193 This goes back to the discussion of passive long-term investing in Chapter 1. Remember we said that if you could add 1% per annum to your investment return over the 40 or 50 years until retirement (assuming you are 21), you add about half as much wealth again at retirement (i.e., +50% wealth)? Well, 0.5% extra adds about 20%. You don't have to memorize these. Just ask, what if I used 9.5% instead of 9% to compound for 40 years? Let's test it with $1000. $1000*(1.09^40) is $31,409. What about at 9.5%?

Well, $1000*(1.095^40) is $37,719. Divide the second one by the first, and it is 20% bigger. Where did 9% come from? I made it up. Try 7% or 8% and it is almost the same ratio.
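A minimal Python sketch of the "try 7% or 8%" check suggested above: it computes the ratio of terminal wealth with and without an extra 0.5% per annum over 40 years, for several made-up base rates. The function name `wealth_ratio` is hypothetical.

```python
def wealth_ratio(base_rate, extra, years):
    """Terminal wealth at (base_rate + extra) divided by terminal wealth at base_rate."""
    return ((1 + base_rate + extra) / (1 + base_rate)) ** years

for base in (0.07, 0.08, 0.09):
    ratio = wealth_ratio(base, extra=0.005, years=40)
    print(f"base {base:.0%}: extra 0.5% p.a. gives {ratio - 1:.1%} more wealth after 40 years")
```

The starting amount cancels out of the ratio, which is why the $1000 in the example could be any number.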

- Q226/Q198 On p. 190 of FFSI, a paragraph discusses P/CF ratios. I always mentally invert it to get CF/P. Then that looks like a return on investment. In my head I have 10% as the long-run return on the market. So, CF/P=10% is fair, higher is attractive, lower is unattractive, other things being equal. ...but then you have to invert back into P/CF. So, P/CF=10 is fair, P/CF lower than 10 is attractive, P/CF higher than 10 is unattractive, other things being equal. We have P/CF=2.99 here, so that's a CF/P=33%, which is great! Answer (a).